Enterprise Voice AI Vendor Evaluation Framework

Evaluating enterprise voice AI vendors requires assessing deployment flexibility, compliance certifications, integration capabilities, and total cost of ownership across a 3-5 year horizon.

Selecting a voice AI platform for enterprise deployment is fundamentally different from choosing a SaaS tool for a small team. The stakes are higher, the integration requirements are more complex, and the wrong choice can lock your organization into years of technical debt. This evaluation framework provides a structured approach to vendor assessment that addresses the unique requirements of large-scale voice AI deployments.

For a structured evaluation of enterprise voice AI options with on-premise deployment capability and custom SLAs, contact the Trillet Enterprise team.

Why Traditional Software Evaluation Frameworks Fall Short for Voice AI

Standard enterprise software evaluation criteria miss critical voice AI considerations. Traditional frameworks focus on features, pricing, and vendor stability, but voice AI introduces real-time performance requirements, conversational quality metrics, and deployment architecture decisions that fundamentally change the evaluation process.

Voice AI platforms must be evaluated across four dimensions that standard software assessments often overlook:

Real-time performance characteristics: Unlike batch-processing systems, voice AI operates in real time with human callers. Response latency above 2 seconds breaks the flow of conversation, and consistent response times matter more than peak performance.

Conversational intelligence quality: The underlying language model, prompt engineering, and conversation management architecture directly impact call outcomes. Two platforms using the same LLM can produce dramatically different results based on their implementation.

Deployment architecture flexibility: Cloud-only platforms may not meet data residency or security requirements. The ability to deploy on-premise, hybrid, or across specific geographic regions is often a hard requirement for regulated industries.

Integration depth with existing systems: Voice AI must connect to CRM, telephony, calendar, and often legacy systems. Surface-level integrations that require manual data synchronization create operational overhead that compounds over time.
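To make the point about response consistency concrete, a minimal sketch follows. The latency samples are invented for illustration, and the 2-second threshold is the one quoted above. It shows how a healthy median can hide a tail that breaks conversations.

```python
import statistics

# Hypothetical per-turn response latencies in milliseconds (not real measurements).
samples_ms = [620, 700, 750, 810, 900, 950, 1100, 1300, 2400, 3100]

BREAKDOWN_THRESHOLD_MS = 2000  # threshold taken from the text above


def latency_summary(samples):
    """Return the median, 95th percentile, and worst-case latency."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "p50": statistics.median(samples),
        "p95": cuts[94],
        "max": max(samples),
    }


summary = latency_summary(samples_ms)
for name, value in summary.items():
    flag = "exceeds threshold" if value > BREAKDOWN_THRESHOLD_MS else "ok"
    print(f"{name}: {value:.0f} ms ({flag})")
```

In this made-up sample the median sits well under the threshold while the 95th percentile does not, which is why tail latency is worth requesting from vendors alongside averages.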

The Seven Pillars of Enterprise Voice AI Evaluation

A comprehensive vendor assessment should examine seven core areas, weighted based on your organization's specific requirements and constraints.

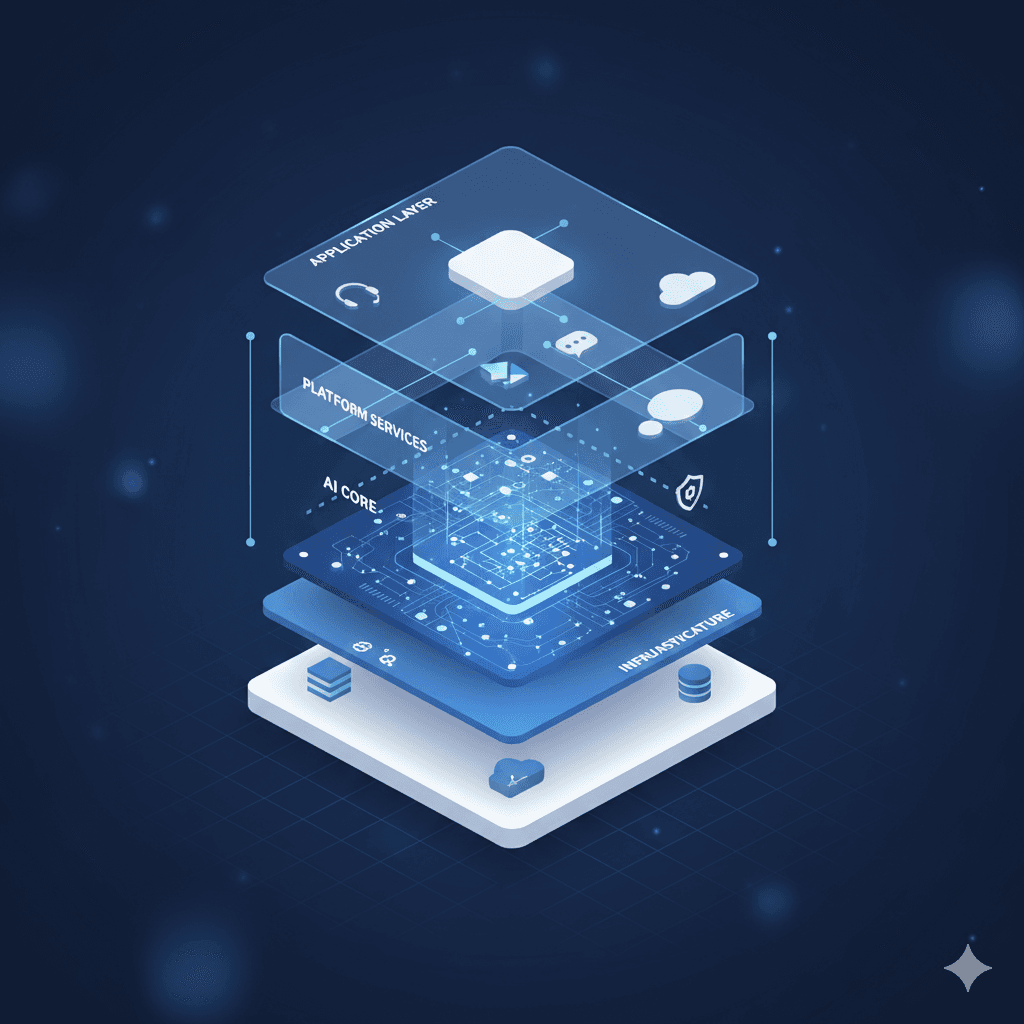
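One way to apply the weighting is a simple scoring matrix. The sketch below is illustrative only: the pillar keys, the weights, and the 1-5 scores are hypothetical placeholders, not recommended values.

```python
# Hypothetical weights per pillar; adjust to your own priorities.
WEIGHTS = {
    "deployment": 0.20,
    "compliance": 0.20,
    "sla": 0.15,
    "integration": 0.15,
    "service_model": 0.10,
    "tco": 0.10,
    "vendor_stability": 0.10,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of per-pillar scores (e.g. on a 1-5 scale)."""
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted pillars")
    total_weight = sum(weights.values())
    return sum(scores[pillar] * w for pillar, w in weights.items()) / total_weight


# Hypothetical scores for one vendor.
vendor_x = {
    "deployment": 5,
    "compliance": 4,
    "sla": 3,
    "integration": 4,
    "service_model": 2,
    "tco": 3,
    "vendor_stability": 4,
}
print(f"Vendor X weighted score: {weighted_score(vendor_x):.2f}")
```

Dividing by the total weight means the weights do not have to sum to exactly 1, which makes it easier to adjust a single pillar during internal discussion.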

1. Deployment Architecture and Data Residency

The first evaluation criterion should be whether the vendor can actually deploy within your required architecture constraints. This is a binary filter that eliminates many vendors before detailed evaluation begins.

Key questions:

Can the platform be deployed on-premise via containerization (Docker/Kubernetes)?

What data residency options exist (APAC, North America, EMEA)?

Where is call data processed and stored?

Can you configure the system to not store sensitive data?

What happens to call recordings and transcripts?

Trillet differentiator: Trillet is the only voice application layer that supports true on-premise deployment via Docker. This enables healthcare organizations, financial institutions, and government agencies to meet strict data sovereignty requirements while still accessing enterprise-grade voice AI capabilities.

For organizations in regulated industries, deployment architecture is not negotiable. A platform that only offers multi-tenant cloud deployment cannot serve healthcare networks requiring HIPAA data segregation or financial institutions with data residency mandates.
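The binary filter described in this section can be sketched as a pass/fail check that runs before any scoring. The vendor names, deployment modes, and region codes below are hypothetical examples, not data about real vendors.

```python
from dataclasses import dataclass


@dataclass
class Vendor:
    name: str
    deployment_modes: set[str]  # e.g. {"cloud", "on_premise", "hybrid"}
    regions: set[str]           # e.g. {"NA", "EMEA", "APAC"}


def passes_hard_filter(vendor: Vendor, required_mode: str, required_region: str) -> bool:
    """A vendor passes only if it meets every hard deployment requirement."""
    return (
        required_mode in vendor.deployment_modes
        and required_region in vendor.regions
    )


# Hypothetical candidates for illustration.
candidates = [
    Vendor("Vendor A", {"cloud"}, {"NA", "EMEA"}),
    Vendor("Vendor B", {"cloud", "on_premise"}, {"NA", "APAC"}),
]

shortlist = [v.name for v in candidates if passes_hard_filter(v, "on_premise", "APAC")]
print(shortlist)
```

Keeping the filter separate from any weighted scoring reflects the point above: a vendor that fails a hard requirement should not be rescued by high scores elsewhere.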

2. Compliance and Security Certifications

Enterprise deployments require verifiable compliance certifications, not marketing claims. Request documentation and audit reports rather than accepting vendor attestations at face value.

Essential certifications by region and industry:

| Requirement | US Healthcare | US Financial | Australian Enterprise | EU Organizations |
| --- | --- | --- | --- | --- |
| Data Protection | HIPAA BAA | GLBA, SOC 2 | Privacy Act 1988 | GDPR |
| Security Framework | SOC 2 Type II | SOC 2 Type II | APRA CPS 234 | ISO 27001 |
| Government | FedRAMP (if applicable) | FINRA | IRAP | ENISA |
| Industry-Specific | - | PCI DSS (if payments) | ASD Essential Eight | NIS2 (2026) |

Evaluation approach:

Request copies of current SOC 2 Type II reports

Verify HIPAA Business Associate Agreement terms

Ask for penetration testing results from CREST-certified assessors

Review data processing agreements for GDPR compliance

Confirm audit frequency and most recent certification dates

Vendors that claim compliance without providing documentation should be treated with skepticism. Compliance is not a feature to be checked off but an ongoing operational requirement that demands continuous investment.

3. Service Level Agreements and Reliability

Enterprise voice AI requires financially backed SLAs that provide meaningful recourse when service levels are not met. Marketing language like "99.9% uptime" is meaningless without contractual backing and clear definitions.

SLA evaluation criteria:

Uptime definition: How is uptime calculated? Does it exclude scheduled maintenance? What about degraded performance that still technically allows calls?

Measurement methodology: How is availability measured? Self-reported metrics are less reliable than third-party monitoring.

Financial remediation: What credits or refunds apply when SLAs are missed? A 10% credit for 1% downtime provides no real accountability.

Incident response times: What are guaranteed response times for Severity 1 (complete outage) vs Severity 2 (degraded performance) issues?

Trillet approach: Trillet Enterprise offers financially guaranteed 99.99% uptime SLAs with contract-based terms negotiated per engagement. This translates to less than 53 minutes of downtime per year, with meaningful financial remediation for any breach.

Be wary of vendors that offer "best effort" SLAs or exclude critical components from their uptime calculations. Voice AI downtime directly impacts customer experience and revenue.
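Uptime percentages are easier to compare once converted into a downtime budget. The helper below is a small sketch of that arithmetic. It assumes a 365-day year and counts all downtime equally, which a real contract may not do.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def downtime_budget_minutes(uptime_percent: float, period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Return the downtime allowed in a period for a given uptime percentage."""
    return period_minutes * (1 - uptime_percent / 100)


for sla in (99.9, 99.99):
    budget = downtime_budget_minutes(sla)
    print(f"{sla}% uptime allows about {budget:.1f} minutes of downtime per year")
```

The same function with `period_minutes=30 * 24 * 60` shows that 1% downtime in a 30-day month is about 432 minutes, or roughly seven hours, which helps put a proposed service credit in context.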

4. Integration Capabilities and Technical Architecture

Enterprise deployments require deep integration with existing systems. Evaluate not just whether integrations exist, but how they are implemented and maintained.

Integration assessment areas:

CRM connectivity: Does the platform offer native integrations with your CRM (Salesforce, HubSpot, Microsoft Dynamics)? Are integrations bidirectional? Can call data, transcripts, and outcomes be automatically logged?

Telephony infrastructure: Can the platform connect to your existing PBX or SIP infrastructure? What about legacy telephony systems? Is number porting supported?

Calendar and scheduling: Native integration with Google Calendar, Outlook, and Calendly allows voice AI to check availability and book appointments in real time.

API completeness: REST API coverage determines what can be automated and customized. Request API documentation and evaluate coverage against your requirements.

Legacy system connectivity: Many enterprises have proprietary or legacy systems that require custom integration. Evaluate the vendor's ability to build custom connectors and the associated timeline and cost.
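Evaluating API coverage against requirements can be as simple as a set comparison. In the sketch below, the operation names are hypothetical labels for capabilities you might need, not endpoints from any real vendor's API.

```python
# Hypothetical capability labels; replace with your own requirements list
# and the operations you find in the vendor's API documentation.
required_operations = {
    "create_call",
    "get_transcript",
    "update_crm_record",
    "book_appointment",
}
documented_operations = {"create_call", "get_transcript", "list_numbers"}


def coverage_report(required: set[str], documented: set[str]) -> tuple[float, list[str]]:
    """Return the fraction of required operations covered and the missing ones."""
    missing = sorted(required - documented)
    covered = len(required & documented) / len(required) if required else 1.0
    return covered, missing


covered, missing = coverage_report(required_operations, documented_operations)
print(f"Coverage: {covered:.0%}; missing: {', '.join(missing) or 'none'}")
```

The list of missing operations is usually more useful than the percentage, since each gap becomes a question about custom connector cost and timeline.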

5. Managed Service vs Self-Service Model

Enterprise voice AI implementation is not a software installation but a multi-phase project requiring expertise in conversational design, integration engineering, and ongoing optimization.

Self-service model characteristics:

Platform access with documentation

Support via ticketing or community forums

Customer responsible for implementation and optimization

Lower monthly cost, higher internal resource requirements

Managed service model characteristics:

Dedicated solution architects for implementation

Custom integrations built by vendor team

Ongoing optimization and monitoring

Higher monthly cost, minimal internal resource requirements

Trillet Enterprise: Fully managed service with zero internal engineering lift required. Trillet builds, deploys, and manages the entire voice AI implementation, including custom legacy system integrations. This model is appropriate for organizations that want to deploy voice AI without building internal expertise.

The choice between managed and self-service depends on your organization's technical capabilities and strategic priorities. If voice AI is not a core competency, managed service reduces risk and accelerates time to value.

6. Total Cost of Ownership Analysis

Vendor pricing models vary significantly, making direct comparison difficult. A comprehensive TCO analysis must account for all cost components over a 3-5 year horizon.

Cost components to evaluate:

Platform fees: Monthly or annual subscription costs, often tiered by features or scale.

Usage costs: Per-minute, per-call, or per-conversation pricing. Verify what counts as a "minute" (connection time vs conversation time).

Integration costs: One-time setup fees, ongoing integration maintenance, and custom development charges.

Implementation costs: Professional services for initial deployment, training, and configuration.

Ongoing optimization: Costs for conversational design updates, prompt engineering, and performance tuning.

Hidden costs: Overage charges, support tier upgrades, compliance add-ons (some vendors charge extra for HIPAA).

Example TCO calculation for 10,000 monthly calls:

| Cost Component | Vendor A | Vendor B | Trillet Enterprise |
| --- | --- | --- | --- |
| Platform fee | $1,200/mo | $0 | Custom contract |
| Usage (10K calls x avg 3 min) | $0.15/min = $4,500 | $0.12/min = $3,600 | $0.09/min = $2,700 |
| HIPAA compliance | +$200/mo | Included | Included |
| Integration setup (one-time) | $5,000 | $10,000 | Included |
| Monthly total | $5,900 | $3,600 | $2,700 + platform fee |
| First-year total (12 months + integration setup) | $75,800 | $53,200 | Custom |

Note: Trillet Enterprise pricing is contract-based and negotiated per engagement. The $0.09/minute rate applies to Agency/Enterprise tiers with volume considerations.
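The arithmetic behind the table can be reproduced, and extended to a multi-year horizon, with a short script. This is a sketch using only the Vendor A and Vendor B figures from the table. The class and function names are illustrative, and Trillet Enterprise is omitted because its platform fee is contract-specific.

```python
from dataclasses import dataclass

CALLS_PER_MONTH = 10_000
AVG_MINUTES_PER_CALL = 3


@dataclass
class VendorPricing:
    platform_fee_monthly: float
    rate_per_minute: float
    compliance_addon_monthly: float
    integration_setup_one_time: float


def monthly_total(p: VendorPricing) -> float:
    """Recurring monthly cost: platform fee + usage + compliance add-on."""
    usage = CALLS_PER_MONTH * AVG_MINUTES_PER_CALL * p.rate_per_minute
    return p.platform_fee_monthly + usage + p.compliance_addon_monthly


def horizon_total(p: VendorPricing, years: int) -> float:
    """Recurring cost over the horizon plus the one-time integration setup."""
    return 12 * years * monthly_total(p) + p.integration_setup_one_time


vendor_a = VendorPricing(1200, 0.15, 200, 5000)
vendor_b = VendorPricing(0, 0.12, 0, 10000)

for name, pricing in (("Vendor A", vendor_a), ("Vendor B", vendor_b)):
    print(
        f"{name}: ${monthly_total(pricing):,.0f}/mo, "
        f"${horizon_total(pricing, 1):,.0f} year 1, "
        f"${horizon_total(pricing, 3):,.0f} over 3 years"
    )
```

With `years=1` the function matches the table's yearly figures, which include the one-time integration setup. Longer horizons make that one-time fee matter less and the per-minute rate matter more.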

7. Vendor Stability and Roadmap Alignment

Enterprise voice AI deployments are multi-year commitments. Vendor stability, funding, and product roadmap alignment matter for long-term success.

Stability indicators:

Company funding history and runway

Customer count and retention rates

Named enterprise customers (with references)

Employee growth trajectory

Market position and competitive dynamics

Roadmap considerations:

Planned features relevant to your use cases

LLM provider relationships and model upgrade path

Geographic expansion plans (if you operate internationally)

Platform architecture evolution

Request conversations with existing enterprise customers in your industry. References should discuss implementation experience, ongoing support quality, and any challenges encountered.

Evaluation Process: A Structured Approach

A disciplined evaluation process reduces bias and ensures comprehensive assessment across all criteria.

Phase 1: Requirements definition (2-3 weeks)

Document functional requirements

Identify compliance and security constraints

Map integration requirements to existing systems

Define success metrics and KPIs

Phase 2: Market scan and RFI (2-3 weeks)

Identify potential vendors (typically 5-8)

Issue Request for Information

Conduct initial screening against hard requirements

Shortlist 3-4 vendors for detailed evaluation

Phase 3: Detailed evaluation and POC (4-6 weeks)

Conduct vendor demonstrations

Execute proof-of-concept with 1-2 finalists

Assess technical integration feasibility

Validate compliance documentation

Check customer references

Phase 4: Negotiation and selection (2-4 weeks)

Negotiate contract terms and SLAs

Finalize pricing and payment terms

Document implementation timeline

Execute agreement

Total timeline: 10-16 weeks for a thorough enterprise evaluation. Shortcuts in this process often lead to implementation challenges or vendor switching within 18 months.
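The total follows from adding the phase ranges, assuming the phases run strictly one after another with no overlap. A quick check:

```python
# Phase durations in weeks (minimum, maximum), as listed above.
PHASES = {
    "Requirements definition": (2, 3),
    "Market scan and RFI": (2, 3),
    "Detailed evaluation and POC": (4, 6),
    "Negotiation and selection": (2, 4),
}

# Sequential phases: the overall range is the sum of the per-phase ranges.
min_weeks = sum(low for low, _ in PHASES.values())
max_weeks = sum(high for _, high in PHASES.values())
print(f"Total timeline: {min_weeks}-{max_weeks} weeks")
```

If some phases overlap in practice, for example reference checks running alongside the POC, the real timeline can come in below the upper bound.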

Red Flags During Vendor Evaluation

Certain warning signs during evaluation should trigger deeper investigation or vendor elimination:

Compliance claims without documentation: Vendors who claim HIPAA compliance but cannot produce a signed BAA template or SOC 2 report should be viewed skeptically.

Lack of enterprise references: If a vendor cannot provide references from organizations similar to yours, they may not have enterprise-grade experience.

Pricing that seems too good: Significantly lower pricing often indicates hidden costs, limited support, or a product that is not ready for enterprise deployment.

Reluctance to discuss architecture: Vendors who avoid technical discussions about infrastructure, data handling, or deployment options may have limitations they prefer not to disclose.

Sales pressure to skip evaluation steps: Enterprise deals benefit vendors significantly. Pressure to accelerate the evaluation process often indicates the vendor knows a thorough evaluation would reveal weaknesses.

No professional services capability: Enterprise deployments require implementation expertise. Vendors without professional services teams expect you to figure it out yourself.

Frequently Asked Questions

How long should an enterprise voice AI evaluation take?

A thorough enterprise evaluation typically requires 10-16 weeks from requirements definition through vendor selection. Rushing this process increases the risk of selecting a vendor that cannot meet your requirements or requires replacement within 18 months.

What is the most important evaluation criterion?

Deployment architecture and data residency are often binary filters that eliminate vendors before other criteria matter. If a vendor cannot deploy within your required architecture constraints, other capabilities are irrelevant.

Should we prioritize managed service or self-service platforms?

This depends on your internal capabilities and strategic priorities. Organizations without dedicated voice AI expertise benefit from managed service models that reduce implementation risk. Organizations with strong engineering teams may prefer self-service platforms that offer more control and customization.

How do we evaluate voice AI quality during a POC?

Effective POC evaluation requires testing with realistic call scenarios, measuring latency under load, and assessing conversation quality through call recordings. Establish success criteria before the POC begins, including target metrics for call completion rates, customer satisfaction, and technical performance.

What compliance certifications are non-negotiable for healthcare deployments?

Healthcare organizations in the US require HIPAA compliance with a signed Business Associate Agreement. Additionally, a current SOC 2 Type II report provides assurance that security controls are operating effectively. Australian healthcare deployments should also consider Privacy Act 1988 requirements and state-specific health records legislation.

Conclusion

Enterprise voice AI vendor evaluation requires a structured approach that addresses deployment architecture, compliance requirements, service level agreements, integration capabilities, service model, total cost of ownership, and vendor stability. The evaluation framework presented here provides a foundation that should be customized based on your organization's specific requirements and constraints.

For organizations that require on-premise deployment, data residency controls, or zero internal engineering lift, Trillet Enterprise offers a fully managed voice AI service with the only Docker-based on-premise deployment option in the market. Contact the enterprise team to discuss your specific requirements and receive a custom evaluation.
