Voice AI for Financial Services Compliance: SOC 2 and GLBA Requirements in 2026

Financial institutions implementing voice AI must meet SOC 2 Type II and GLBA compliance requirements, including data encryption, access controls, audit logging, and customer data protection safeguards.

Voice AI deployment in financial services faces unique regulatory scrutiny. Unlike other industries where convenience drives adoption, banks, credit unions, wealth management firms, and insurance companies must demonstrate that their AI systems meet the same security and privacy standards as their core banking infrastructure. This article examines the specific compliance requirements financial institutions face when implementing voice AI, and how leading platforms address these challenges.

For managed voice AI deployment with SOC 2 Type II certification, GLBA-compliant data handling, and on-premise deployment options, contact the Trillet Enterprise team.

What Compliance Standards Apply to Voice AI in Financial Services?

Financial institutions deploying voice AI typically contend with three overlapping compliance frameworks: SOC 2 Type II (which institutions generally require of their vendors), GLBA, and often state-specific regulations.

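A SOC 2 examination can cover up to five trust services criteria: security, availability, processing integrity, confidentiality, and privacy. Security is included in every SOC 2 report; the other four are included at the service organization's discretion, so institutions should check which criteria a vendor's report actually covers. For voice AI, this means demonstrating that call recordings, transcripts, and customer data are protected throughout their lifecycle. Unlike SOC 2 Type I (a point-in-time assessment of control design), Type II tests whether controls operated effectively over an observation period, commonly 6 to 12 months. Strictly speaking, SOC 2 is an attestation report issued by an independent CPA firm rather than a certification, although the industry commonly calls it one.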

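GLBA (the Gramm-Leach-Bliley Act) requires financial institutions to protect the security and confidentiality of customer information. For institutions under FTC jurisdiction, this is implemented through the Safeguards Rule (16 CFR Part 314); banks follow the parallel Interagency Guidelines Establishing Information Security Standards. The Safeguards Rule specifically requires: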

Designation of a qualified individual to oversee information security

Written risk assessments for customer information handling

Implementation of safeguards based on risk assessment findings

Regular testing and monitoring of safeguards

Staff training on security practices

Oversight of service providers

State-specific regulations add complexity. New York's DFS Cybersecurity Regulation (23 NYCRR 500), for example, requires multi-factor authentication for systems accessing nonpublic information, penetration testing, and encryption of data both in transit and at rest.

How Does Voice AI Create Compliance Risk for Financial Institutions?

Voice AI systems introduce compliance exposure at multiple points in the data flow.

Call recording storage presents the most obvious risk. Voice AI platforms typically record calls for quality assurance and training purposes. Under GLBA, recordings that capture customers' nonpublic personal information are "customer information" requiring protection. Under SOC 2, their storage and access must meet confidentiality criteria.

Transcript generation creates additional exposure. When voice AI converts speech to text for processing, it generates written records of potentially sensitive conversations. A customer providing account numbers, Social Security digits, or discussing financial difficulties creates a transcript that requires the same protection as a written document containing that information.

Third-party data transmission raises vendor management concerns. Most voice AI platforms route calls through cloud infrastructure, potentially crossing multiple jurisdictions. GLBA's service provider oversight requirements mean financial institutions must contractually bind their voice AI vendors to security standards and verify compliance.

AI model training introduces a less obvious risk. Some voice AI platforms use customer interactions to improve their models. For financial institutions, this could mean customer data enters training datasets that are accessible to the vendor's employees or that could surface to other customers through a shared model's outputs.

| Compliance Risk | GLBA Requirement | SOC 2 Criterion | Mitigation |
|---|---|---|---|
| Call recording storage | Safeguards Rule | Confidentiality | Encryption at rest, access controls |
| Transcript generation | Customer information protection | Processing integrity | PII redaction, data minimization |
| Third-party transmission | Service provider oversight | Security | Contractual obligations, audits |
| AI model training | Information security program | Privacy | Opt-out of training, data isolation |

What Technical Controls Do Compliant Voice AI Platforms Provide?

Platforms designed for financial services typically implement specific technical controls addressing SOC 2 and GLBA requirements.

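Encryption requirements differ by standard. SOC 2 does not mandate specific algorithms; auditors assess whether encryption is appropriate to the sensitivity of the data. The FTC Safeguards Rule, as amended in 2021, requires encryption of customer information both in transit over external networks and at rest, and permits effective compensating controls, reviewed and approved by the Qualified Individual, only where encryption is infeasible. In practice, compliant voice AI platforms implement: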

TLS 1.2 or later for data in transit, with TLS 1.3 preferred

AES-256 encryption for data at rest

End-to-end encryption options where call content is encrypted from capture to storage
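For the in-transit item, a minimal Python sketch using only the standard-library `ssl` module shows how a client (for example, a service pushing call audio to a transcription endpoint) could refuse any handshake below TLS 1.3. The function name is hypothetical, and the sketch does not cover encryption at rest, which is typically handled by a key management service or a library such as `cryptography`.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that rejects anything older than TLS 1.3."""
    # create_default_context() turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse TLS 1.2 and earlier handshakes outright.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


if __name__ == "__main__":
    ctx = make_tls13_client_context()
    print(ctx.minimum_version, ctx.check_hostname, ctx.verify_mode == ssl.CERT_REQUIRED)
```

The context would then be passed to whatever HTTP or socket client carries the audio, so the minimum version is enforced at the connection layer rather than left to each call site.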

Access controls must support the principle of least privilege. This means:

Role-based access to call recordings and transcripts

Multi-factor authentication for administrative access

Audit logging of all data access

Time-limited access tokens for API calls
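The access-control requirements above map naturally onto a small authorization function. The sketch below assumes hypothetical role names and an in-memory audit log. It checks role permissions, requires a completed MFA challenge for administrative actions, and records every decision, including denials.

```python
import json
import time
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; real platforms define their own roles.
ROLE_PERMISSIONS = {
    "qa_reviewer": {"read_transcript"},
    "compliance_officer": {"read_transcript", "read_recording", "export_audit_log"},
    "admin": {"read_transcript", "read_recording", "export_audit_log", "manage_users"},
}

# Actions treated as administrative, which therefore require MFA.
ADMIN_ACTIONS = {"manage_users", "export_audit_log"}


@dataclass
class User:
    user_id: str
    role: str
    mfa_verified: bool


# In-memory stand-in; production systems would ship entries to tamper-evident storage.
audit_log: list[str] = []


def authorize(user: User, action: str, resource_id: str) -> bool:
    """Least-privilege check that logs every decision, allowed or denied."""
    allowed = action in ROLE_PERMISSIONS.get(user.role, set())
    if allowed and action in ADMIN_ACTIONS and not user.mfa_verified:
        allowed = False  # administrative actions need a completed MFA challenge
    audit_log.append(json.dumps({
        "ts": time.time(),
        "user": user.user_id,
        "action": action,
        "resource": resource_id,
        "allowed": allowed,
    }))
    return allowed
```

Logging denials as well as grants is generally useful as audit evidence, since it shows that unauthorized attempts are recorded and not only successful access.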

Data residency matters for institutions with geographic restrictions. Some regulations require customer data to remain within specific jurisdictions. Compliant platforms offer configurable data residency, allowing financial institutions to specify that data remains in particular regions (e.g., United States only, or within the EU for GDPR overlap).
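A configurable residency setting ultimately has to be enforced wherever data is written. A minimal sketch follows, with hypothetical policy names and region identifiers (real names depend on the cloud provider). It rejects any storage target outside the institution's chosen set before the write happens.

```python
# Hypothetical policies and region identifiers, for illustration only.
ALLOWED_REGIONS = {
    "us_only": {"us-east", "us-west"},
    "eu_only": {"eu-central", "eu-west"},
}


class ResidencyViolation(Exception):
    """Raised when a write would place customer data outside the permitted regions."""


def check_residency(policy: str, target_region: str) -> None:
    """Raise before any write whose target region falls outside the configured policy."""
    permitted = ALLOWED_REGIONS.get(policy)
    if permitted is None:
        raise ValueError(f"unknown residency policy: {policy}")
    if target_region not in permitted:
        raise ResidencyViolation(f"{target_region} is not permitted under {policy}")
```

Failing closed on an unknown policy, rather than defaulting to "allow", keeps a misconfiguration from silently moving data across borders.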

PII handling options range from basic to sophisticated. Basic platforms store everything. More compliance-focused platforms offer:

Real-time PII detection and redaction from transcripts

Option to not store call recordings after processing

Automatic deletion schedules aligned with retention policies

Data isolation between customers (multi-tenant architecture with logical separation)
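Real-time redaction and retention schedules are the two items above that are easiest to picture in code. The sketch below assumes transcripts arrive as plain text with spoken digits already normalized to numerals. The regex patterns, labels, and the `select_for_deletion` helper are hypothetical and far less robust than the entity-recognition models production platforms use.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Optional

# Illustrative patterns only; SSNs are checked first so a 9-digit SSN is not labeled an account.
PII_PATTERNS = [
    ("SSN", re.compile(r"\b\d{3}-?\d{2}-?\d{4}\b")),
    ("ACCOUNT", re.compile(r"\b\d{8,17}\b")),
]


def redact(transcript: str) -> str:
    """Replace each pattern match with a bracketed label such as [SSN]."""
    for label, pattern in PII_PATTERNS:
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript


def is_expired(created_at: datetime, retention_days: int, now: Optional[datetime] = None) -> bool:
    """True once a record has been held for at least retention_days."""
    now = now or datetime.now(timezone.utc)
    return now - created_at >= timedelta(days=retention_days)


def select_for_deletion(records: list[dict], retention_days: int, now: datetime) -> list[str]:
    """Return IDs of records past the retention window (hypothetical record shape)."""
    return [r["id"] for r in records if is_expired(r["created_at"], retention_days, now)]


if __name__ == "__main__":
    print(redact("My account number is 12345678901 and my SSN is 123-45-6789."))
```

Running redaction before a transcript is persisted, rather than as a later cleanup job, means the unredacted text never lands in long-term storage.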

How Do On-Premise Deployment Options Change the Compliance Equation?

For financial institutions with strict security policies, cloud-only voice AI platforms may be non-starters regardless of their compliance certifications.

On-premise deployment fundamentally changes the compliance calculation. When the voice AI application runs within the institution's own infrastructure, the institution controls:

Physical security of hardware

Network segmentation and firewall rules

Data backup and disaster recovery

Access to production systems

Audit log retention and access

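This control significantly simplifies, though does not eliminate, vendor management under GLBA. Rather than relying primarily on the vendor's SOC 2 report to demonstrate security, the institution applies its own security program to the voice AI system; any vendor support access to the deployment still warrants oversight.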

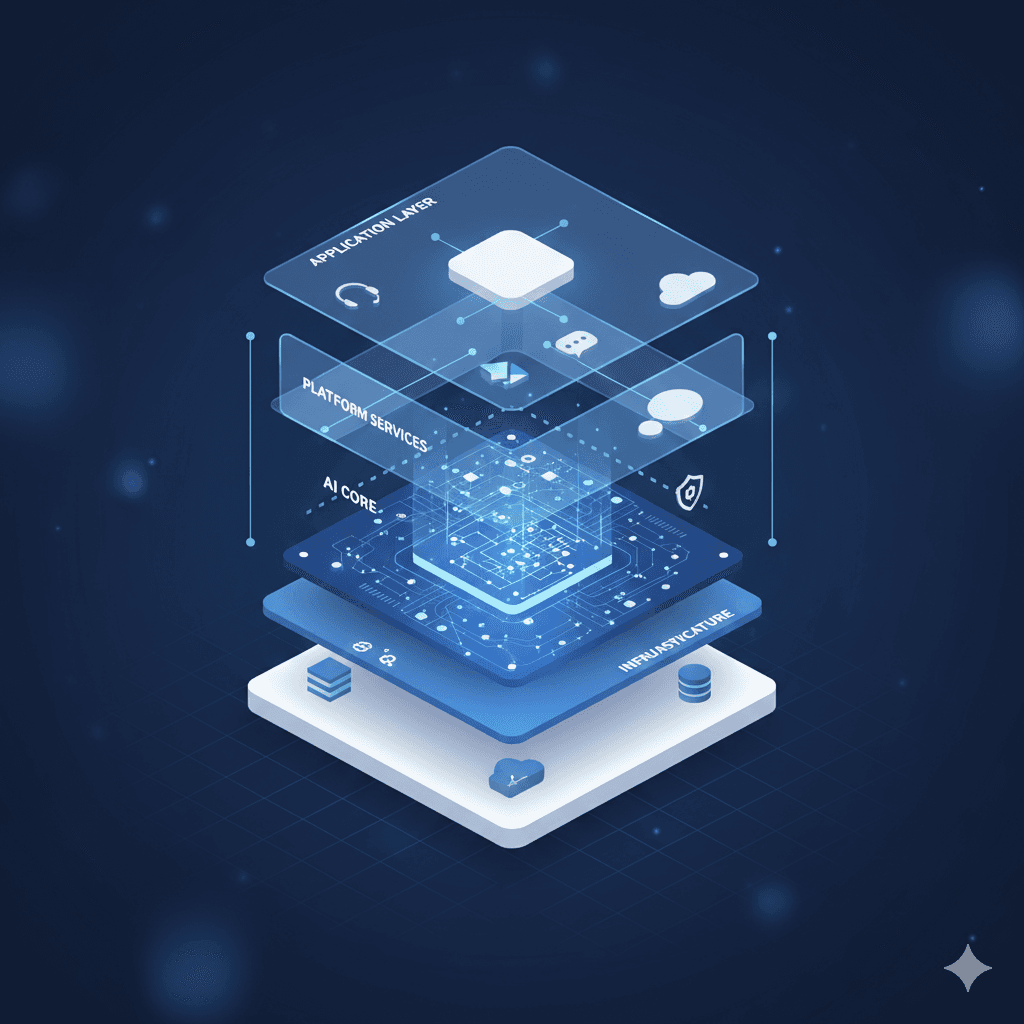

Trillet's enterprise offering provides Docker-based on-premise deployment, making it the only voice application layer that can be fully hosted within a financial institution's own data center. This architecture allows compliance teams to treat the voice AI system as an internal application subject to existing security controls, rather than a third-party service requiring extensive vendor management.

The trade-off is operational complexity. On-premise deployment requires internal resources for:

Infrastructure provisioning and maintenance

Security patching and updates

Performance monitoring

Capacity planning

Financial institutions must weigh this operational overhead against the compliance simplification of controlling their own infrastructure.

What Should Financial Institutions Evaluate When Selecting Voice AI Platforms?

Due diligence for voice AI vendors should address specific compliance requirements.

Documentation requests should include:

Current SOC 2 Type II report (not Type I)

Data processing agreement with GLBA-compliant terms

Subprocessor list identifying all parties with data access

Incident response procedures and notification timelines

Data retention and deletion capabilities

Technical evaluation should verify:

Encryption implementation (algorithms, key management)

Access control granularity

Audit log completeness and retention

API security (authentication, rate limiting, input validation)

Data residency options

PII handling capabilities
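API authentication is one item on that checklist, and time-limited credentials are a common way to provide it. The sketch below shows the underlying idea: an HMAC-signed token carrying an expiry timestamp, verified with a constant-time comparison. The token format and the hard-coded key are hypothetical simplifications. Production systems usually rely on an established standard such as OAuth 2.0 access tokens rather than a hand-rolled scheme.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical signing key; in practice this comes from a KMS or HSM, never source code.
SECRET = b"example-signing-key"


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(client_id: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Create a token of the form base64(client_id:expiry:signature)."""
    issued = int(time.time() if now is None else now)
    payload = f"{client_id}:{issued + ttl_seconds}"
    return base64.urlsafe_b64encode(f"{payload}:{_sign(payload)}".encode()).decode()


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the client_id if the token is authentic and unexpired, otherwise None."""
    try:
        decoded = base64.urlsafe_b64decode(token.encode()).decode()
        client_id, expires, sig = decoded.rsplit(":", 2)
    except ValueError:  # covers bad base64, invalid UTF-8, and wrong field count
        return None
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(sig.encode(), _sign(f"{client_id}:{expires}").encode()):
        return None
    current = time.time() if now is None else now
    if current >= int(expires):
        return None  # expired
    return client_id
```

Because the expiry is inside the signed payload, a caller cannot extend a token's lifetime without invalidating its signature.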

Contractual requirements should cover:

Right to audit or receive audit reports

Data breach notification timelines (72 hours or less)

Data deletion upon contract termination

Prohibition on using customer data for AI training without consent

Indemnification for compliance failures

| Evaluation Criterion | Minimum Acceptable | Preferred |
|---|---|---|
| SOC 2 report | Current Type II | Current Type II with an unqualified (clean) opinion |
| Encryption at rest | AES-256 | AES-256 with customer-managed keys |
| Data residency | US-only option | Configurable by region |
| PII handling | Manual redaction | Automated real-time redaction |
| Deployment model | Cloud with contractual controls | On-premise option available |
| Audit logs | 90-day retention | Configurable retention with export capability |
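Teams that track several vendors sometimes encode the "Minimum Acceptable" column as a checklist. The sketch below uses field names invented for illustration, not taken from any vendor questionnaire. It reports which minimums a vendor misses so gaps can be raised during due diligence.

```python
from dataclasses import dataclass


@dataclass
class VendorProfile:
    # Hypothetical fields mirroring the rows of the evaluation table.
    soc2_type: str                # "I", "II", or "none"
    soc2_report_current: bool
    encryption_at_rest: str       # e.g. "AES-256"
    us_only_residency: bool
    pii_redaction: str            # "none", "manual", or "automated"
    audit_log_retention_days: int
    contractual_controls: bool    # GLBA-aligned contract terms in place


def minimum_gaps(v: VendorProfile) -> list[str]:
    """Return each criterion where the vendor falls below the minimum acceptable level."""
    gaps = []
    if not (v.soc2_type == "II" and v.soc2_report_current):
        gaps.append("SOC 2: current Type II report")
    if v.encryption_at_rest != "AES-256":
        gaps.append("Encryption at rest: AES-256")
    if not v.us_only_residency:
        gaps.append("Data residency: US-only option")
    if v.pii_redaction not in ("manual", "automated"):
        gaps.append("PII handling: at least manual redaction")
    if v.audit_log_retention_days < 90:
        gaps.append("Audit logs: at least 90-day retention")
    if not v.contractual_controls:
        gaps.append("Deployment model: contractual controls for cloud")
    return gaps
```

An empty list means the vendor clears every minimum; the "Preferred" column still calls for judgment rather than a pass/fail check.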

Frequently Asked Questions

Is SOC 2 Type II certification sufficient for GLBA compliance?

SOC 2 Type II demonstrates that a vendor has implemented security controls, but it does not directly address GLBA requirements. Financial institutions must still conduct their own risk assessment, ensure contractual protections are in place, and verify that the vendor's controls align with the institution's specific GLBA compliance program. SOC 2 reports provide evidence for vendor oversight but do not substitute for it.

Can financial institutions use voice AI for customer authentication?

Yes, but with appropriate controls. Voice AI can collect information for authentication (account numbers, security questions) but should implement PII redaction in transcripts and avoid storing sensitive authentication data beyond the call session. Some platforms support voice biometrics for authentication, which introduces additional privacy considerations under GLBA and state biometric privacy laws.

How do I evaluate voice AI vendors for financial services compliance?

Request SOC 2 Type II reports, verify GLBA-compliant data processing agreements, and confirm on-premise deployment options if required by your security policies. Contact Trillet Enterprise for compliance documentation and a custom assessment of your regulatory requirements.

How long does SOC 2 Type II certification take for a voice AI vendor?

A SOC 2 Type II report covers an observation period that typically runs 6 to 12 months after controls are implemented, and the report is issued only after that period closes. A vendor without a current Type II report is therefore often 12 to 18 months away from having one, once readiness work, the observation window, and report issuance are counted. Vendors offering only a Type I report are demonstrating control design but not operational effectiveness.

What happens to voice AI data if the vendor relationship ends?

GLBA requires financial institutions to ensure customer information is properly handled upon contract termination. Compliant voice AI contracts should specify data return or deletion timelines (typically 30-90 days), certification of deletion, and continued confidentiality obligations post-termination. On-premise deployments simplify this since the institution controls data deletion directly.

Conclusion

Financial services compliance adds meaningful complexity to voice AI deployment, but the requirements are well understood and addressable with appropriate platform selection and contractual controls. Institutions should prioritize vendors with current SOC 2 Type II certification, configurable data residency, robust PII handling, and clear contractual terms addressing GLBA requirements.

For organizations where vendor management overhead or cloud deployment restrictions create barriers, on-premise deployment options provide an alternative path to voice AI adoption while maintaining direct control over compliance-critical infrastructure. Trillet Enterprise offers the only Docker-based on-premise voice AI deployment, enabling financial institutions to implement voice AI within their existing security program rather than building vendor oversight processes around cloud services.

Related Resources:

Voice AI for Regulated Industries - Healthcare, finance, and government compliance overview

Voice AI for Australian Enterprises: APRA CPS 234 and IRAP Compliance - Australian financial services requirements

The Return of On-Premise: Why Enterprises Are Rethinking Cloud-Only Voice AI