Voice AI Legacy System Integration Approaches

Enterprise voice AI integration requires connecting modern AI platforms to existing telephony, CRM, and ERP systems without disrupting operations or requiring costly rip-and-replace migrations.

Organizations with decades of infrastructure investment face a fundamental challenge: how do you deploy cutting-edge voice AI when your call center runs on 15-year-old Avaya switches, your customer data lives in an on-premises CRM deployed in 2012, and your telephony provider still uses ISDN trunks? The answer lies in choosing integration approaches that work with your existing architecture rather than against it.

For fully managed voice AI deployment with custom legacy system integrations included at no additional cost, contact the Trillet Enterprise team.

Why Legacy Integration Matters for Voice AI Deployments

Failed voice AI implementations most commonly result from integration failures, not AI capability limitations. A 2024 Gartner study found that 67% of enterprise voice AI projects that missed their deployment targets cited integration complexity as the primary cause.

The challenge compounds across multiple dimensions:

Telephony infrastructure: PBX systems, ViciDial/Asterisk dialers, SIP trunks, ISDN lines, and proprietary call routing

Customer data systems: CRMs, ERPs, customer databases, and data warehouses

Authentication and identity: SSO providers, Active Directory, and role-based access controls

Compliance systems: Call recording, quality monitoring, and regulatory reporting tools

Workflow automation: Business process management systems and orchestration platforms

Each integration point introduces potential failure modes, latency, and maintenance overhead. The most successful enterprise deployments prioritize integration architecture from day one.

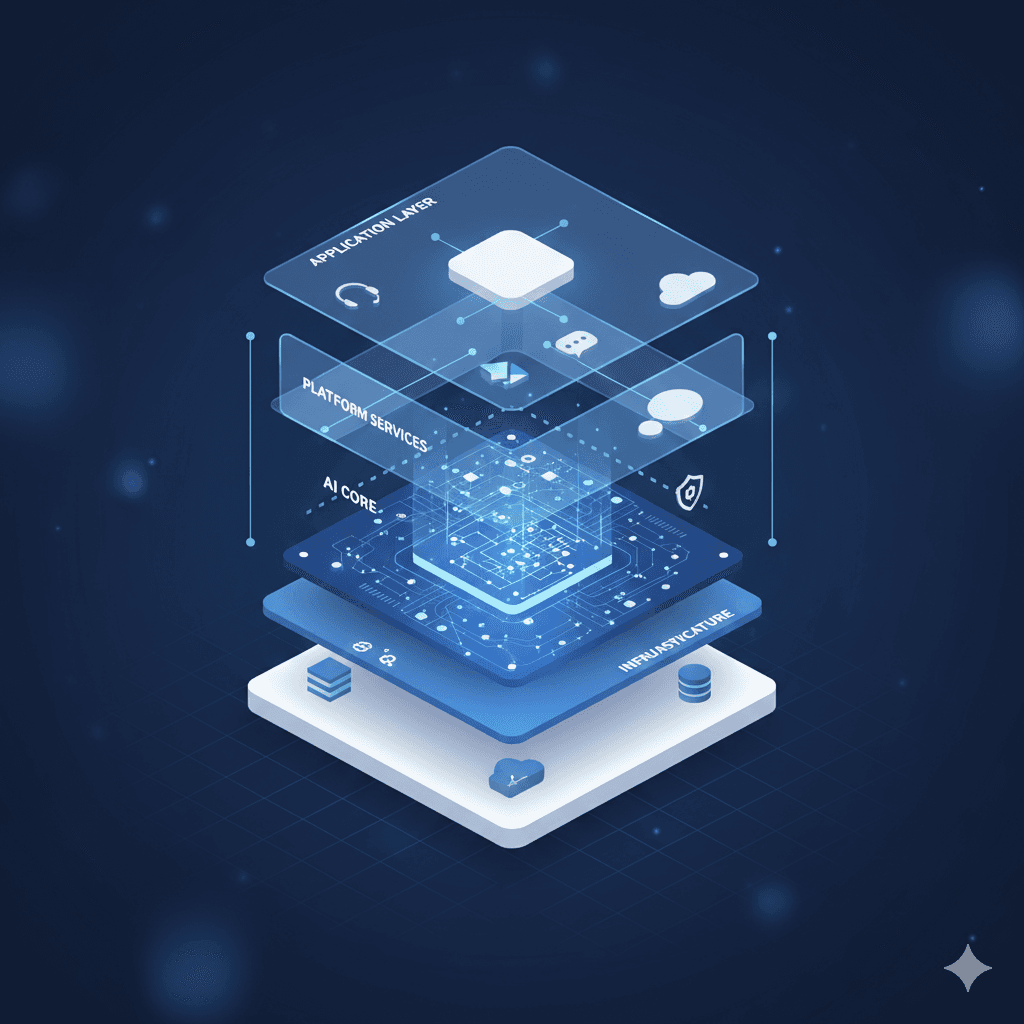

Four Core Integration Patterns for Enterprise Voice AI

Enterprise voice AI integrations typically follow one of four architectural patterns, each with distinct trade-offs for latency, maintenance, and flexibility.

Pattern 1: Direct API Integration

Direct API integration connects voice AI platforms directly to backend systems through REST or GraphQL APIs. This approach adds little latency and few moving parts, but it couples the voice AI tightly to each backend and requires available API endpoints on legacy systems.

Best for: Organizations with modern API gateways, microservices architectures, or API-enabled legacy systems

Latency impact: Minimal (50-150ms per API call)

Implementation complexity: Low to moderate

Trade-offs: Requires existing API infrastructure; changes to backend systems may break integrations; tight coupling reduces flexibility

For organizations with Salesforce, HubSpot, or modern CRM platforms, direct API integration typically provides the cleanest path. The voice AI platform authenticates via OAuth 2.0, retrieves customer context in real-time, and writes conversation data back to the CRM without intermediate systems.
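As a rough illustration of the lookup half of that flow, the sketch below builds an authenticated request and sends it with a tight timeout. The endpoint path, the `phone` query parameter, and both function names are assumptions made for this example; each CRM defines its own search API, and the OAuth 2.0 token exchange is assumed to have happened already.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical search path; real CRMs each define their own.
CONTACT_SEARCH_PATH = "/api/contacts/search"


def build_lookup_request(base_url: str, phone: str, access_token: str) -> urllib.request.Request:
    """Build a GET request that looks up a contact by phone number."""
    query = urllib.parse.urlencode({"phone": phone})
    url = f"{base_url.rstrip('/')}{CONTACT_SEARCH_PATH}?{query}"
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"Bearer {access_token}",  # OAuth 2.0 bearer token
            "Accept": "application/json",
        },
        method="GET",
    )


def fetch_contact(request: urllib.request.Request, timeout_s: float = 0.15) -> dict:
    """Send the request with a short timeout so a slow CRM cannot stall the call."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.load(response)
```

The default timeout mirrors the upper end of the 50-150ms range quoted above; in practice it would be tuned against measured CRM response times.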

Pattern 2: Middleware/Integration Platform as a Service (iPaaS)

iPaaS solutions like MuleSoft, Boomi, or Workato sit between voice AI platforms and legacy systems, handling protocol translation, data mapping, and orchestration.

Best for: Organizations with heterogeneous systems, complex data transformations, or multiple integration targets

Latency impact: Moderate (100-300ms added per hop)

Implementation complexity: Moderate to high

Trade-offs: Adds another system to maintain; introduces additional failure points; enables complex workflows and data transformations

This pattern excels when legacy systems expose non-standard interfaces. A voice AI platform might need to query an AS/400-based inventory system, a Siebel CRM, and a custom-built order management system in a single conversation. iPaaS handles the protocol differences (SOAP, proprietary APIs, file-based interfaces) while presenting a unified interface to the voice AI layer.
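The "unified interface" idea can be sketched in a few lines. This is not how MuleSoft, Boomi, or Workato are implemented; it is a hypothetical facade (`UnifiedCustomerView`) in which each adapter function hides one backend's protocol and a failure in one backend does not discard data from the others.

```python
from typing import Any, Callable, Dict

# An adapter hides one backend's protocol (SOAP, proprietary API, file drop)
# behind a plain function: customer_id -> dict.
Adapter = Callable[[str], Dict[str, Any]]


class UnifiedCustomerView:
    """Hypothetical facade that fans one request out to several backends."""

    def __init__(self, adapters: Dict[str, Adapter]) -> None:
        self._adapters = adapters

    def fetch(self, customer_id: str) -> Dict[str, Any]:
        data: Dict[str, Any] = {}
        errors: Dict[str, str] = {}
        for name, adapter in self._adapters.items():
            try:
                data[name] = adapter(customer_id)
            except Exception as exc:  # one failing backend should not sink the rest
                errors[name] = str(exc)
        return {"customer_id": customer_id, "data": data, "errors": errors}
```

The voice AI layer sees a single response shape regardless of how many systems sit behind it, and the `errors` map tells it which context is missing.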

Pattern 3: Database-Level Integration

Database-level integration bypasses application APIs entirely, reading from and writing to backend databases directly through secure database connections or CDC (Change Data Capture) streams.

Best for: Legacy systems without APIs; high-volume data synchronization; real-time event streaming

Latency impact: Low for reads (20-100ms); variable for writes depending on transaction handling

Implementation complexity: High

Trade-offs: Bypasses business logic in application layer; requires deep knowledge of database schemas; can create data integrity risks

This approach works when legacy applications lack APIs but database access is available. A voice AI system might read customer history directly from an Oracle database powering a 20-year-old billing system. However, write operations require careful handling to avoid bypassing validation rules and business logic that exist only in the application layer.
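A read-only lookup of this kind might look like the sketch below. It uses Python's built-in `sqlite3` module purely as a stand-in for the Oracle connection, and the `invoices` table and its columns are invented for illustration. The points it is meant to show are the parameterized query and the absence of any write path.

```python
import sqlite3
from typing import Dict, List


def recent_invoices(conn: sqlite3.Connection, customer_id: str, limit: int = 3) -> List[Dict]:
    """Read the most recent invoices for a customer without writing anything."""
    rows = conn.execute(
        # Parameterized query: caller-supplied values never enter the SQL text.
        "SELECT invoice_no, amount_cents, status FROM invoices "
        "WHERE customer_id = ? ORDER BY issued_on DESC LIMIT ?",
        (customer_id, limit),
    ).fetchall()
    return [
        {"invoice_no": row[0], "amount_cents": row[1], "status": row[2]}
        for row in rows
    ]
```

Connecting with a read-only database account (or, for SQLite, a URI such as `file:billing.db?mode=ro` with `uri=True`) is one way to enforce at the connection level that this path cannot bypass application-layer validation.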

Pattern 4: Event-Driven Architecture

Event-driven integration uses message brokers and queues (Kafka, RabbitMQ, Amazon SQS) to decouple voice AI platforms from backend systems. The voice AI publishes events (call started, appointment booked, escalation requested) while backend systems subscribe and react asynchronously.

Best for: High-scale deployments; systems requiring loose coupling; organizations with existing event infrastructure

Latency impact: Variable (near-real-time to seconds depending on consumer processing)

Implementation complexity: High initial setup; lower ongoing maintenance

Trade-offs: Eventual consistency rather than immediate; requires event schema governance; debugging distributed systems is complex

Event-driven patterns suit organizations processing thousands of concurrent calls where synchronous API calls would create bottlenecks. The voice AI handles the conversation while backend updates happen asynchronously, accepting eventual consistency in exchange for scalability.
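The publish-then-react flow can be sketched with an in-process queue standing in for the broker. `InMemoryBroker` and the event fields below are assumptions for illustration only; a real deployment would use the Kafka, RabbitMQ, or SQS client library and a governed event schema.

```python
import json
import queue
import time
import uuid
from typing import Callable


def make_event(event_type: str, call_id: str, payload: dict) -> str:
    """Serialize an event with an ID and timestamp so consumers can deduplicate."""
    return json.dumps({
        "event_id": str(uuid.uuid4()),
        "type": event_type,
        "call_id": call_id,
        "occurred_at": time.time(),
        "payload": payload,
    })


class InMemoryBroker:
    """Stand-in for a real message broker; illustration only."""

    def __init__(self) -> None:
        self._queue: "queue.Queue[str]" = queue.Queue()

    def publish(self, message: str) -> None:
        # The voice AI returns to the conversation immediately after this call.
        self._queue.put(message)

    def drain(self, handler: Callable[[dict], None]) -> int:
        """Deliver all pending events to a consumer; returns how many were handled."""
        handled = 0
        while True:
            try:
                message = self._queue.get_nowait()
            except queue.Empty:
                return handled
            handler(json.loads(message))
            handled += 1
```

The gap between `publish` and `drain` is the eventual-consistency window described above: the backend sees the booking only once its consumer runs.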

Telephony Integration: The Foundation Layer

Voice AI cannot function without telephony integration. The approach depends heavily on existing infrastructure.

SIP Trunk Integration

Modern cloud PBX systems and SIP-enabled on-premise systems integrate most cleanly. The voice AI platform registers as a SIP endpoint, receiving calls directly or through call forwarding rules.

Requirements: SIP-compliant PBX or carrier; firewall rules allowing SIP/RTP traffic; codec compatibility (G.711, Opus)

Latency: Minimal when properly configured (sub-50ms for call setup)

Trillet approach: Native SIP trunk support with automatic codec negotiation; can integrate with existing Twilio, Vonage, or carrier SIP infrastructure

Legacy PBX Integration

Older Avaya, Cisco, or Mitel PBX systems may require CTI (Computer Telephony Integration) middleware or gateway devices that bridge TDM/ISDN infrastructure to SIP.

Options:

Hardware media gateways (AudioCodes, Sangoma) converting TDM to SIP

Softphone integrations via CTI APIs

Call forwarding to cloud-based voice AI numbers

Trade-offs: Gateway hardware adds latency (typically 20-50ms); CTI integrations may have licensing costs; call forwarding loses some call metadata

Contact Center Platform Integration

Enterprise contact centers running Genesys, Five9, NICE, or Amazon Connect have their own integration patterns. Most support webhook-based routing or APIs that allow voice AI to handle specific call types before human escalation.

Integration points:

Pre-routing: Voice AI handles calls before they enter the contact center queue

Overflow handling: Route to voice AI when queue times exceed thresholds

After-hours: Voice AI takes all calls outside business hours

Triage: Voice AI qualifies and routes calls to appropriate queues
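The after-hours and overflow rules above reduce to a small routing decision. The sketch below is a hypothetical function, not any contact center platform's API; the business hours and the 120-second overflow threshold are placeholder values.

```python
import datetime


def route_call(
    local_time: datetime.time,
    estimated_wait_s: int,
    open_at: datetime.time = datetime.time(8, 0),
    close_at: datetime.time = datetime.time(18, 0),
    overflow_threshold_s: int = 120,
) -> str:
    """Decide whether a call goes to the voice AI or the human queue."""
    # After-hours: voice AI takes all calls outside business hours.
    if not (open_at <= local_time < close_at):
        return "voice_ai:after_hours"
    # Overflow: voice AI absorbs calls when the queue is too long.
    if estimated_wait_s > overflow_threshold_s:
        return "voice_ai:overflow"
    return "human_queue"
```

Pre-routing and triage would sit in front of this check, with the voice AI answering first and then handing the call to the queue this function selects.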

ViciDial and Asterisk-Based Dialer Integration

ViciDial is one of the most widely deployed open-source contact center platforms, yet most voice AI vendors offer no integration path. For call centers running ViciDial, this creates a binary choice: abandon working infrastructure or forgo voice AI.

Trillet Enterprise maintains production-proven ViciDial integration as a core capability, enabling voice AI deployment without infrastructure replacement.

Integration architecture:

AGI (Asterisk Gateway Interface): Voice AI intercepts calls during IVR flows

AMI (Asterisk Manager Interface): Full call control, transfer, and queue management

Database integration: Direct MySQL access for lead data and dispositions

Agent screen pop: Push caller context to agent screens before transfer

Use cases:

Inbound call screening and qualification

Outbound campaign augmentation (AI handles voicemails, gatekeepers)

After-hours coverage with seamless agent handoff

Lead qualification before human agent connection

Trillet approach: Native AGI/AMI integration with ViciDial; production deployments handling call center workloads; zero configuration changes to existing ViciDial setup
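For readers unfamiliar with AGI, the protocol is line-based: Asterisk first sends a block of `agi_*: value` lines terminated by a blank line, and each command the script issues is answered with a line such as `200 result=1 (data)`. The helpers below are a minimal parsing sketch under that understanding. They are not Trillet's integration code, and the function names are invented for this example.

```python
import re
from typing import Dict, Iterable, Optional, Tuple

# Matches AGI command responses such as "200 result=1 (sales)" or "200 result=0".
_RESPONSE_RE = re.compile(r"^(\d{3}) result=(-?\d+)(?: \((.*)\))?")


def parse_agi_environment(lines: Iterable[str]) -> Dict[str, str]:
    """Collect the agi_* variables Asterisk sends before the first command."""
    env: Dict[str, str] = {}
    for raw in lines:
        line = raw.strip()
        if not line:  # a blank line ends the environment block
            break
        key, _, value = line.partition(":")
        env[key.strip()] = value.strip()
    return env


def parse_agi_response(line: str) -> Tuple[int, int, Optional[str]]:
    """Split a response line into (status code, result, optional data)."""
    match = _RESPONSE_RE.match(line.strip())
    if match is None:
        raise ValueError(f"unexpected AGI response: {line!r}")
    code, result, data = match.groups()
    return int(code), int(result), data
```

In a live script the environment lines would come from standard input (or a FastAGI socket), and values such as `agi_callerid` would feed the customer lookup before the AI speaks.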

CRM and Customer Data Integration

Voice AI derives its value from context. Without access to customer data, every caller is a stranger and every conversation starts from zero.

Real-Time Customer Lookup

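When a call arrives, the voice AI should retrieve customer context within a few hundred milliseconds. This requires:

Caller ID matching: ANI (the caller's number) to customer record lookup, with DNIS (the dialed number) identifying which line or campaign was called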

Account context: Recent orders, open tickets, account status

Conversation history: Previous interactions across channels

Personalization data: Preferences, communication history, VIP status

Latency requirements: Customer lookup must complete before the AI begins speaking (typically under 500ms total including greeting)

Trillet approach: Pre-built connectors for Salesforce, HubSpot, Zoho, and custom CRM systems; real-time lookup via API with configurable caching
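One way to picture "real-time lookup with configurable caching" is a small time-to-live cache in front of the CRM call. The `TtlCache` class below is a hypothetical sketch, not a Trillet component; the 60-second default is a placeholder, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TtlCache:
    """Cache customer records by caller number for a limited time."""

    def __init__(
        self,
        fetch: Callable[[str], Any],
        ttl_s: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._ttl_s = ttl_s
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, ani: str) -> Any:
        now = self._clock()
        hit = self._entries.get(ani)
        if hit is not None and now - hit[0] < self._ttl_s:
            return hit[1]  # fresh enough: skip the CRM round trip
        record = self._fetch(ani)  # cache miss or stale entry
        self._entries[ani] = (now, record)
        return record
```

A short TTL suits data like open tickets that can change between calls, while slowly changing fields such as preferences could tolerate a longer one.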

Write-Back and Synchronization

Post-call, voice AI should update CRM records with:

Call summary and transcript

Actions taken (appointments scheduled, tickets created)

Customer sentiment and intent classification

Follow-up tasks for human agents

Synchronization patterns:

Synchronous: Write immediately after call; higher latency but immediate consistency

Asynchronous: Queue writes for batch processing; lower latency but delayed updates

Hybrid: Critical data (appointments) synchronous; supplementary data (transcripts) asynchronous
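The hybrid pattern amounts to splitting the post-call result in two. In the sketch below, the field names in `CRITICAL_FIELDS` and the `write_now` and `enqueue` callables are assumptions standing in for a synchronous CRM write and a background queue.

```python
from typing import Any, Callable, Dict

# Hypothetical names for data that must be consistent as soon as the call ends.
CRITICAL_FIELDS = {"appointment", "ticket"}


def write_back(
    call_result: Dict[str, Any],
    write_now: Callable[[Dict[str, Any]], None],
    enqueue: Callable[[Dict[str, Any]], None],
) -> None:
    """Write critical fields synchronously and defer everything else."""
    critical = {k: v for k, v in call_result.items() if k in CRITICAL_FIELDS}
    deferred = {k: v for k, v in call_result.items() if k not in CRITICAL_FIELDS}
    if critical:
        write_now(critical)  # blocks until the CRM confirms
    if deferred:
        enqueue(deferred)  # transcript, sentiment, and similar data
```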

Authentication and Identity Integration

Enterprise voice AI must respect existing identity and access controls.

SSO Integration

Voice AI admin portals should integrate with enterprise SSO providers (Okta, Microsoft Entra ID (formerly Azure AD), Ping Identity) via SAML 2.0 or OIDC. This ensures:

Centralized user provisioning and deprovisioning

Consistent authentication policies (MFA, password requirements)

Audit trails in existing identity systems

Role-based access inherited from directory groups

Caller Authentication

For sensitive transactions, voice AI may need to authenticate callers beyond ANI matching:

Knowledge-based authentication: Date of birth, last four digits of SSN, account number

Voice biometrics: Voiceprint matching against enrolled samples

Out-of-band verification: SMS or email codes during the call

Warm transfer: Hand off to human agents for high-security transactions
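Out-of-band verification can be sketched with the standard library alone. The class name, the five-minute expiry, and the three-attempt limit below are illustrative assumptions; sending the code by SMS or email is outside the sketch.

```python
import hmac
import secrets
import time
from typing import Callable


def issue_code(digits: int = 6) -> str:
    """Generate a zero-padded one-time code from a cryptographically secure source."""
    return f"{secrets.randbelow(10 ** digits):0{digits}d}"


class PendingVerification:
    """Track one issued code with an expiry and a limited number of attempts."""

    def __init__(
        self,
        code: str,
        ttl_s: float = 300.0,
        max_attempts: int = 3,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._code = code
        self._clock = clock
        self._expires_at = clock() + ttl_s
        self._attempts_left = max_attempts

    def verify(self, spoken_digits: str) -> bool:
        if self._attempts_left <= 0 or self._clock() > self._expires_at:
            return False
        self._attempts_left -= 1
        # Constant-time comparison avoids leaking how many digits matched.
        return hmac.compare_digest(
            self._code.encode("utf-8"), spoken_digits.encode("utf-8")
        )
```

When `verify` keeps returning `False`, the warm transfer option listed above is the natural fallback.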

Common Integration Pitfalls and How to Avoid Them

Enterprise voice AI integrations fail in predictable ways. Understanding these patterns helps avoid them.

Pitfall 1: Underestimating Latency Budgets

Voice conversations have strict latency requirements. Humans perceive delays over 300ms as unnatural pauses. If your integration chain adds 500ms of latency (API gateway + middleware + database query + response formatting), the AI will seem slow and unresponsive.

Solution: Map every integration hop and its latency contribution. Implement aggressive caching for data that changes infrequently. Use async patterns for non-blocking operations.
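Mapping hops can start as simple arithmetic. The helper below is an illustrative sketch: the 300ms default comes from the perception threshold mentioned above, while the function name and the per-hop figures in the usage note are hypothetical.

```python
from typing import Dict


def check_latency_budget(hops_ms: Dict[str, int], budget_ms: int = 300) -> Dict[str, object]:
    """Sum per-hop latencies and report how far the chain exceeds the budget."""
    total = sum(hops_ms.values())
    return {
        "total_ms": total,
        "over_budget_ms": max(0, total - budget_ms),
        "largest_hop": max(hops_ms, key=hops_ms.get),  # first candidate for caching
    }
```

For example, hops of 50ms (API gateway), 250ms (middleware), 150ms (database query), and 50ms (response formatting) total 500ms, which is 200ms over budget, with middleware as the largest contributor.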

Pitfall 2: Ignoring Error Handling

When integrations fail mid-call, the voice AI needs graceful degradation paths. A CRM timeout should not crash the call.

Solution: Define fallback behaviors for every integration point. If customer lookup fails, proceed with basic call handling and retry in background. Log failures for operational visibility.
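A fallback of this kind might be sketched as follows, assuming the lookup is an ordinary blocking function. `lookup_with_fallback`, the 300ms default, and the shape of the returned dictionary are all assumptions for illustration.

```python
import concurrent.futures
import logging
import time
from typing import Any, Callable, Dict

logger = logging.getLogger("voice_ai.integration")

# Shared pool so a slow lookup keeps running in the background after we give up on it.
_executor = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def lookup_with_fallback(
    lookup: Callable[[str], Any], ani: str, timeout_s: float = 0.3
) -> Dict[str, Any]:
    """Return customer context, or a generic context if the lookup is slow or fails."""
    started = time.monotonic()
    future = _executor.submit(lookup, ani)
    try:
        return {"known_caller": True, "record": future.result(timeout=timeout_s)}
    except concurrent.futures.TimeoutError:
        elapsed_ms = (time.monotonic() - started) * 1000
        logger.warning("lookup for %s timed out after %.0f ms", ani, elapsed_ms)
    except Exception:
        logger.exception("lookup for %s failed", ani)
    # Degrade gracefully: the call continues with basic handling.
    return {"known_caller": False, "record": None}
```

Because the timed-out future is not cancelled, a caller could attach `future.add_done_callback(...)` to pick up the record once it arrives, which is one way to realize the "retry in background" behavior.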

Pitfall 3: Overlooking Data Mapping Complexity

Legacy systems have idiosyncratic data models. Customer names split across three fields. Dates in proprietary formats. Status codes that mean different things in different contexts.

Solution: Build comprehensive data mapping documentation before integration begins. Allocate time for edge cases. Plan for ongoing mapping maintenance as source systems change.
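A mapping layer for the quirks listed above could look like the sketch below. Every field name (`NAME_FIRST`, `OPEN_DT`, `STAT_CD`), the `YYYYMMDD` date format, and the status codes are invented for illustration; the real values come from the mapping documentation for each source system.

```python
import datetime
from typing import Any, Dict

# Hypothetical legacy status codes and their meanings.
STATUS_MAP = {"A": "active", "S": "suspended", "C": "closed"}


def normalize_customer(raw: Dict[str, Any]) -> Dict[str, Any]:
    """Translate one legacy customer row into the shape the voice AI expects."""
    # Names split across three fields, any of which may be blank or missing.
    name_parts = [
        (raw.get(key) or "").strip()
        for key in ("NAME_FIRST", "NAME_MID", "NAME_LAST")
    ]
    return {
        "full_name": " ".join(part for part in name_parts if part),
        # Dates stored as compact YYYYMMDD strings in this hypothetical system.
        "opened_on": datetime.datetime.strptime(raw["OPEN_DT"], "%Y%m%d").date(),
        # Unknown codes are surfaced rather than guessed at.
        "status": STATUS_MAP.get(raw["STAT_CD"].strip().upper(), "unknown"),
    }
```

Keeping the mapping in one function gives the ongoing maintenance work a single place to land when a source system changes.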

Pitfall 4: Treating Integration as a One-Time Project

Backend systems change. APIs get versioned. Database schemas evolve. Integration is ongoing maintenance, not a one-time implementation.

Solution: Build monitoring and alerting for integration health. Version your integration configurations. Plan for quarterly integration reviews.

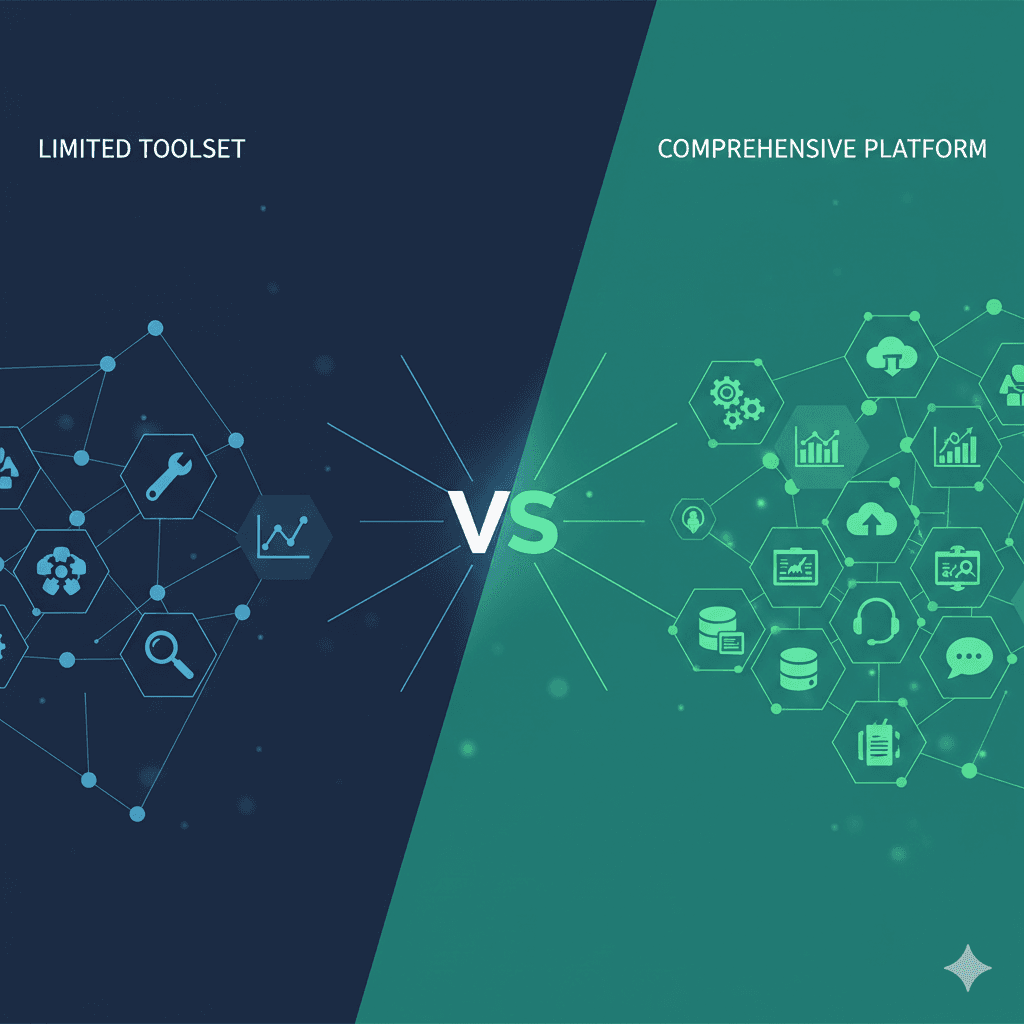

Managed Service vs. Self-Build: Integration Considerations

Organizations face a fundamental choice: build integration infrastructure internally or rely on managed services.

Self-Build Approach

Advantages:

Complete control over integration architecture

No external dependencies for critical systems

Potentially lower long-term costs at scale

Disadvantages:

Requires dedicated engineering resources

Internal team must maintain expertise across voice AI, telephony, and all integrated systems

Longer time to deployment

Integration maintenance becomes permanent overhead

Managed Service Approach

Advantages:

Vendor handles integration complexity

Faster deployment (weeks vs. months)

Integration maintenance included in service

Access to pre-built connectors and proven patterns

Disadvantages:

Less control over integration architecture

Dependency on vendor capabilities

May require compromises to fit standard patterns

Trillet approach: Fully managed enterprise service includes custom legacy integrations. Trillet's solution architects design integration architecture; Trillet engineering builds and maintains connections. Zero internal engineering lift required.

Comparison: Integration Capabilities Across Voice AI Platforms

| Capability | Trillet Enterprise | Typical Cloud Platform | DIY (Retell/Vapi) |
| --- | --- | --- | --- |
| Pre-built CRM connectors | Salesforce, HubSpot, Zoho, custom | Limited selection | None (build yourself) |
| ViciDial/Asterisk integration | Production-proven (AGI/AMI) | Not supported | Custom development |
| Legacy PBX support | Full (SIP, CTI, gateway) | SIP only | SIP only |
| Custom integration development | Included in service | Professional services ($$$) | Your engineering team |
| Integration maintenance | Managed 24/7 | Self-service | Self-service |
| iPaaS compatibility | Native MuleSoft, Workato support | Varies | API-level only |
| Database-level integration | Available for complex cases | Not offered | Your engineering team |

Frequently Asked Questions

How long does enterprise voice AI integration typically take?

Timeline depends on integration complexity. Simple SIP + CRM integrations deploy in 2-4 weeks. Complex multi-system integrations with legacy PBX and custom databases typically require 6-8 weeks. Trillet's managed service handles all integration work within these timelines.

Can voice AI integrate with legacy systems that have no APIs?

Yes, through database-level integration, file-based interfaces, or screen-scraping for systems with only terminal interfaces. These approaches require deeper technical expertise but are feasible for most legacy systems. Trillet Enterprise includes custom integration development for systems without standard APIs.

What happens when an integration fails during a call?

Properly architected voice AI systems have fallback behaviors for every integration point. If CRM lookup fails, the AI proceeds with the call using available information and retries data retrieval in the background. Critical failures (telephony down) route to backup systems or human agents. Trillet Enterprise includes 24/7 monitoring with automatic alerting for integration issues.

How do you handle data security for legacy system connections?

Integration connections should use encrypted channels (TLS 1.3 for APIs, encrypted database connections). Credentials should be stored in secrets management systems, not code. Data in transit and at rest should meet your compliance requirements. Trillet Enterprise supports private connectivity (VPN, Direct Connect) for sensitive integrations and configurable data residency.

Conclusion

Legacy system integration remains the critical success factor for enterprise voice AI deployments. The right integration architecture balances latency, maintainability, and flexibility while working within your existing infrastructure constraints.

For organizations without dedicated voice AI engineering resources, managed services that include integration development and maintenance offer the fastest path to deployment. Trillet Enterprise provides fully managed integration services with zero internal engineering lift, handling everything from SIP trunk configuration to custom legacy system connections.

Explore Trillet Enterprise for managed voice AI deployment with custom integration services, or review the Enterprise Voice AI Orchestration Guide for comprehensive deployment planning.
