The cloud-first era isn't over - but for voice AI, it's getting more nuanced.

For the past decade, the enterprise playbook was simple: move everything to the cloud. It made sense. Cloud infrastructure offered speed, scale, and freedom from managing physical hardware. For most workloads, that logic still holds.

But something has shifted. Across regulated industries - finance, healthcare, legal, government - we're seeing a quiet pullback. Not a rejection of cloud, but a more selective approach. Certain workloads are coming back on-premise, or never leaving in the first place.

Voice AI is one of them.

Voice Data Is Different

Most enterprise data is sensitive. But voice data sits in a category of its own.

When a customer calls your contact centre, that conversation can contain personally identifiable information, account details, health information, legal admissions - sometimes all in a single call. The audio itself can count as biometric data, since a voice can identify the person speaking. Transcripts become permanent records. Sentiment analysis reveals emotional states.

For companies operating in regulated industries, every one of these data points triggers compliance obligations. And for companies operating across jurisdictions, those obligations multiply.

This is where cloud-only voice AI starts to create friction.

The Dual-Jurisdiction Problem

Consider an Australian-headquartered company with US operations. If it is APRA-regulated, it is subject to Prudential Standard CPS 234, which requires it to maintain information security controls, including over information assets managed by third parties. At the same time, its US operations may fall under HIPAA or state-level privacy laws, and its US customers may expect SOC 2 attestation from the vendors it uses.

A cloud-hosted voice AI platform creates immediate questions: Where is the data processed? Where is it stored? Which jurisdiction's laws apply? Can the vendor's employees access it? What happens to call recordings?

For some organisations, the answer is straightforward - they pick a region-specific cloud instance and move on. But for many, particularly those with strict board-level data governance requirements or regulatory obligations that demand data never leave controlled infrastructure, cloud-only simply doesn't work.

These companies aren't anti-cloud. They're pro-control.

On-Premise in 2025 Isn't What You Think

When people hear "on-premise," they often picture the 2005 version: server rooms, hardware procurement cycles, dedicated IT teams managing patches and uptime. That model still exists, but it's not what modern on-premise looks like for most enterprises.

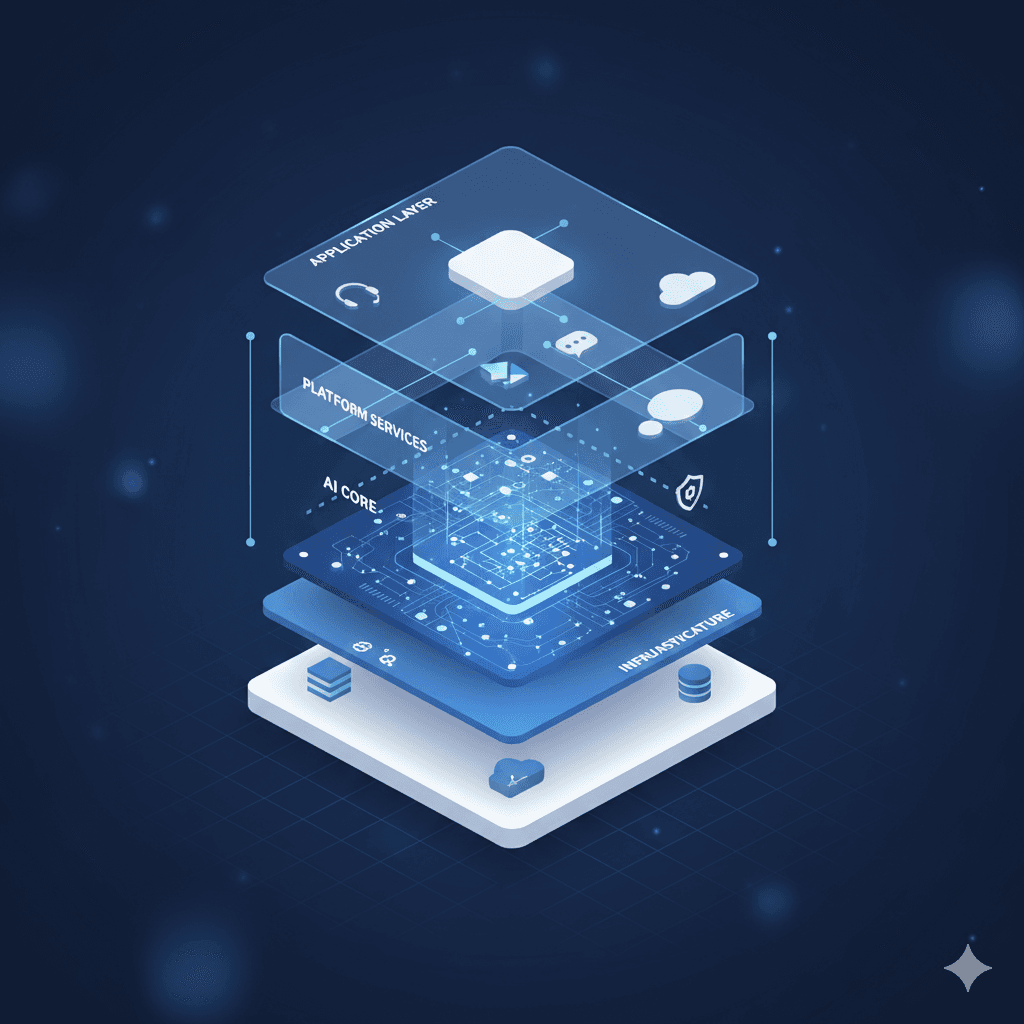

Today's on-premise is containerised. A vendor ships a Docker image. You deploy it in your private cloud, your data centre, or your air-gapped environment. The application runs within your network boundary, on infrastructure you control, subject to your security policies.

The operational model has changed too. Many vendors now offer managed services for on-premise deployments - they handle updates, monitoring, and support while you retain control of the infrastructure and data. You get the compliance benefits of on-premise while offloading much of the day-to-day operational work of running it.

This hybrid model - on-premise infrastructure with managed operations - is what's driving the resurgence.

What to Look for in an On-Premise Voice AI Platform

Not all voice AI platforms are built for on-premise deployment. Many were architected cloud-first and offer on-premise as an afterthought - if at all. If you're evaluating platforms for an on-premise or hybrid deployment, here's what matters:

Deployment flexibility. Can you deploy in your private cloud, your own data centre, or both? Is the containerisation clean, or are there hidden dependencies on the vendor's infrastructure?
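One way to probe for hidden dependencies during an evaluation is to list every endpoint the deployment is configured to call and flag any that fall outside your network boundary. The sketch below is a minimal illustration under stated assumptions: the endpoint map is hypothetical (in practice you would pull it from the vendor's configuration), and "internal" is assumed to mean the RFC 1918 private IPv4 ranges plus a hypothetical `.corp.internal` domain. Substitute your own ranges and domains.

```python
from ipaddress import ip_address, ip_network
from urllib.parse import urlparse

# Assumption: these are the ranges and domains your organisation treats as
# "inside the boundary". Here, the RFC 1918 private IPv4 ranges plus a
# hypothetical internal DNS suffix.
INTERNAL_NETWORKS = [
    ip_network("10.0.0.0/8"),
    ip_network("172.16.0.0/12"),
    ip_network("192.168.0.0/16"),
]
INTERNAL_DOMAINS = (".corp.internal",)


def is_internal(url: str) -> bool:
    """Return True if the URL's host is an internal IP or internal domain."""
    host = urlparse(url).hostname or ""
    try:
        addr = ip_address(host)
    except ValueError:
        # Not an IP literal, so fall back to matching the domain suffix.
        return host.endswith(INTERNAL_DOMAINS)
    return any(addr in net for net in INTERNAL_NETWORKS)


def external_dependencies(endpoints: dict[str, str]) -> list[str]:
    """Names of configured endpoints that point outside the boundary."""
    return sorted(name for name, url in endpoints.items() if not is_internal(url))


# Hypothetical endpoint map for a containerised voice AI deployment.
endpoints = {
    "tts": "http://10.2.3.4:8080",
    "llm": "https://llm.corp.internal/v1",
    "licence_check": "https://license.vendor.example.com/api",
}
print(external_dependencies(endpoints))
```

This only inspects what the configuration declares. A licence server or telemetry endpoint hard-coded into the image would not show up here, so a check like this is best paired with default-deny egress rules and a look at what the containers actually try to reach.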

Provider independence. The best platforms act as an orchestration layer. You should be able to bring your own TTS, LLM, and telephony providers - including on-premise versions of those services. If you're locked into the vendor's model choices, you've just traded one control problem for another.
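To make "orchestration layer" concrete, here is a minimal Python sketch, assuming a simplified turn-based flow, in which the agent depends only on small interfaces for its LLM, TTS, and telephony providers. Every class and method name is hypothetical rather than any vendor's API, and speech recognition is left out, so the caller's words arrive as text. The stub classes stand in for on-premise services you might plug in.

```python
from dataclasses import dataclass
from typing import Protocol


# Hypothetical provider interfaces: the orchestration layer depends only on these.
class LLMProvider(Protocol):
    def respond(self, transcript: str) -> str: ...


class TTSProvider(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class TelephonyProvider(Protocol):
    def play(self, call_id: str, audio: bytes) -> None: ...


@dataclass
class VoiceAgent:
    llm: LLMProvider
    tts: TTSProvider
    telephony: TelephonyProvider

    def handle_turn(self, call_id: str, caller_text: str) -> str:
        """Generate a reply, synthesise it, and send it back down the call."""
        reply = self.llm.respond(caller_text)
        audio = self.tts.synthesize(reply)
        self.telephony.play(call_id, audio)
        return reply


# Stubs standing in for self-hosted services inside your network.
class LocalLLM:
    def respond(self, transcript: str) -> str:
        return f"You said: {transcript}"


class LocalTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # placeholder for real audio bytes


class RecordingTelephony:
    def __init__(self) -> None:
        self.sent: list[tuple[str, bytes]] = []

    def play(self, call_id: str, audio: bytes) -> None:
        self.sent.append((call_id, audio))


telephony = RecordingTelephony()
agent = VoiceAgent(llm=LocalLLM(), tts=LocalTTS(), telephony=telephony)
reply = agent.handle_turn("call-1", "check my balance")
print(reply)
```

Because `VoiceAgent` only sees the interfaces, swapping a hosted LLM for a self-hosted one means passing a different object at construction time; the orchestration code itself doesn't change.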

Managed operations. Unless you have a dedicated team for this, look for a vendor that will deploy into your environment, handle updates, and provide support - without requiring access to your data.

Compliance alignment. The platform should make your compliance posture easier, not harder. That means clear documentation, audit-ready architecture, and experience with the frameworks that matter to you - whether that's APRA, HIPAA, SOC 2, or something else.

The Bottom Line

The cloud isn't going anywhere. For most workloads, it remains the right choice. But voice AI - with its unique combination of sensitive data, regulatory exposure, and real-time processing requirements - is one of the workloads where enterprises are increasingly demanding options.

The companies building voice AI platforms have a choice: remain cloud-only and lose deals to compliance requirements, or build for deployment flexibility and meet enterprises where they are.

The smart money is on flexibility.

Trillet is a voice AI platform built for enterprise deployment flexibility - cloud, private cloud, or on-premise. Learn more about our on-premise deployment options.