On-Premise Voice AI Deployment via Docker

On-premise voice AI deployment via Docker enables enterprises to run conversational AI within their own infrastructure, maintaining full data sovereignty and meeting strict regulatory requirements.

For organizations in healthcare, financial services, and government sectors, cloud-only voice AI solutions can present compliance barriers that are difficult or impossible to overcome. Data residency mandates, security policies, and regulatory frameworks often prohibit sensitive conversation data from leaving organizational boundaries. Docker-based on-premise deployment addresses this by running the voice AI application layer entirely within your controlled environment.

For enterprises requiring on-premise voice AI deployment, contact the Trillet Enterprise team to discuss your infrastructure requirements and compliance needs.

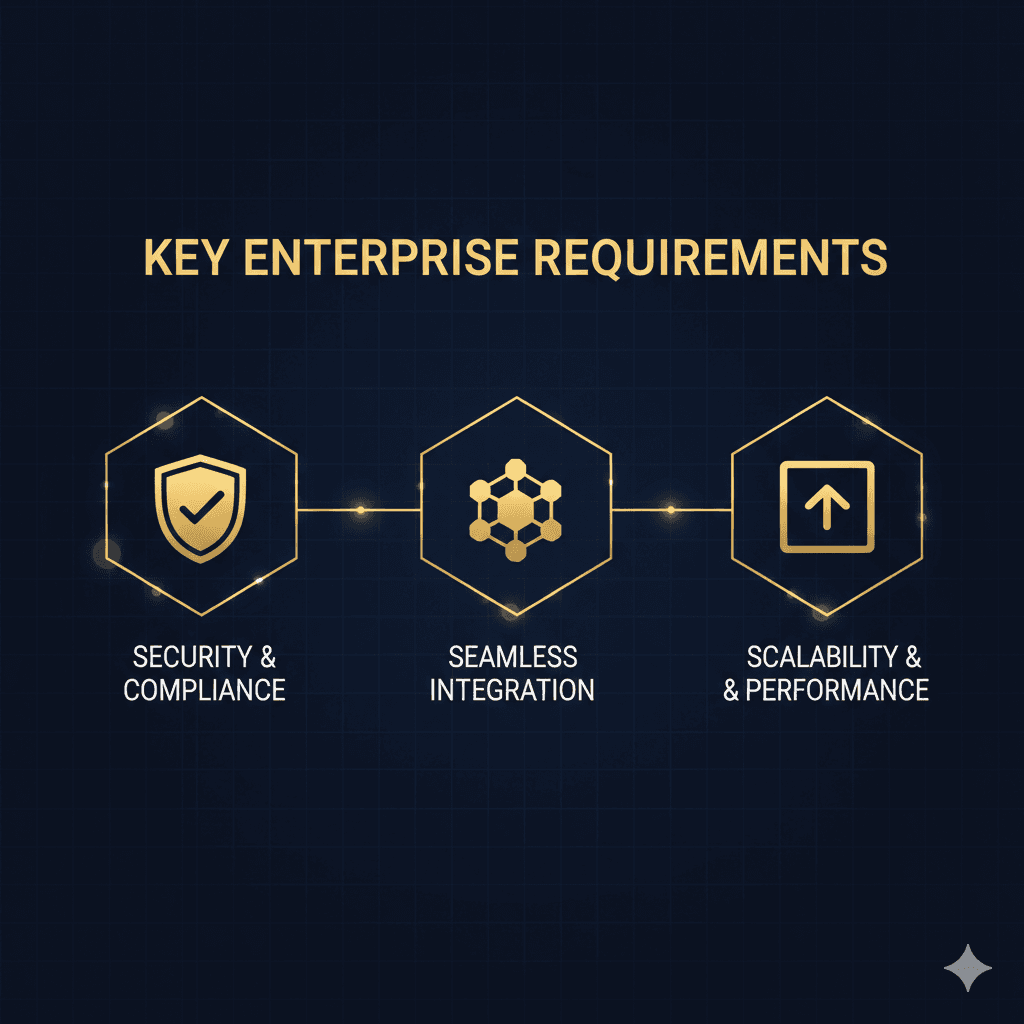

Why Do Enterprises Need On-Premise Voice AI?

On-premise deployment addresses three critical enterprise requirements: data sovereignty, regulatory compliance, and security control that cloud-only solutions cannot satisfy.

The shift toward on-premise voice AI reflects broader enterprise concerns about data control. According to industry surveys, 67% of enterprises cite data residency as a primary concern when evaluating AI solutions. For voice AI specifically, the stakes are higher because conversations contain personally identifiable information (PII), protected health information (PHI), financial data, and sensitive business discussions.

Key drivers for on-premise deployment include:

Regulatory mandates: HIPAA, APRA CPS 234, GDPR Article 44, and sector-specific regulations often require data to remain within specific geographic or organizational boundaries

Security policies: Many enterprises prohibit production data from traversing public internet connections or residing on third-party infrastructure

Audit requirements: On-premise deployment simplifies compliance auditing by eliminating third-party data processor relationships

Latency optimization: Local deployment eliminates internet round-trips, reducing voice AI response latency by 50-150ms in typical configurations

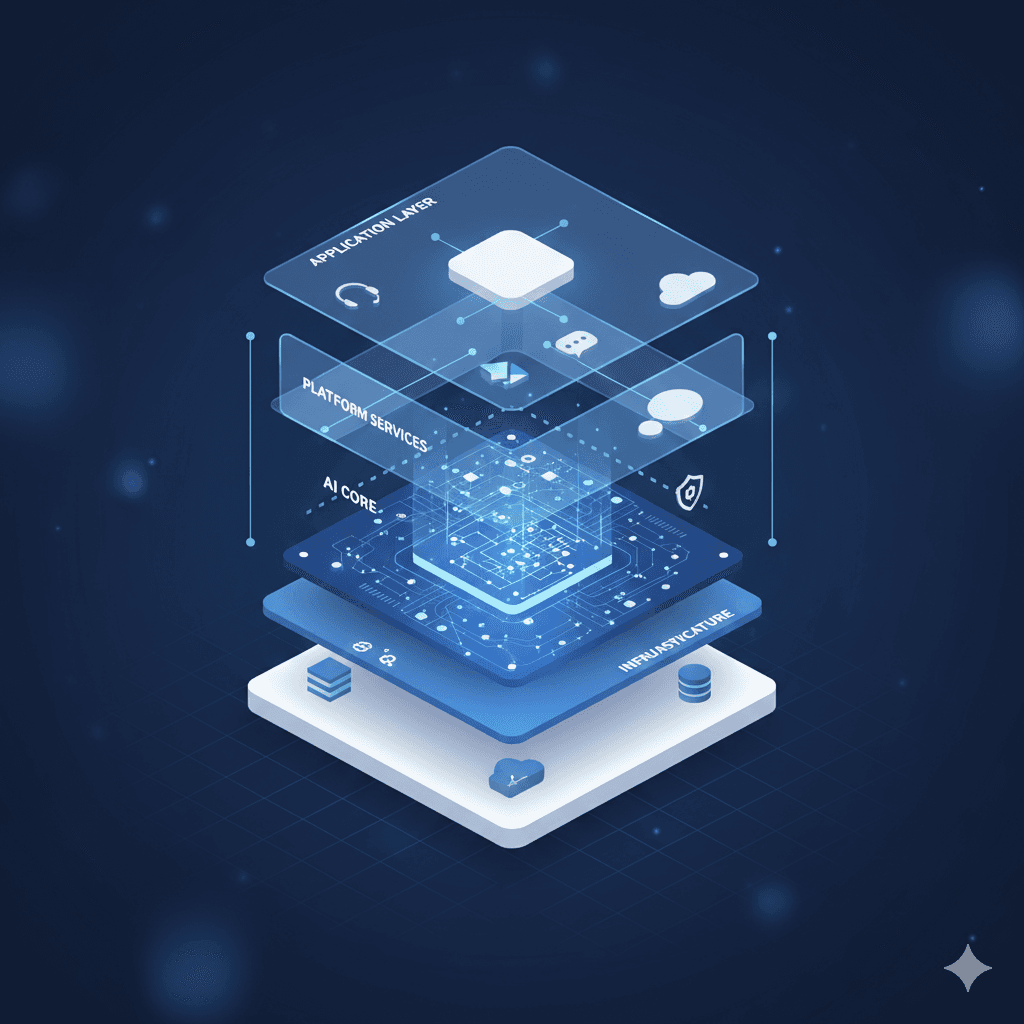

How Does Docker-Based Voice AI Deployment Work?

Docker containerization packages the voice AI application layer into portable, isolated units that run consistently across any infrastructure with a compatible container runtime, with or without an orchestrator.

The architecture typically involves several containerized components:

Voice processing container: Handles real-time audio streaming, speech-to-text conversion, and text-to-speech synthesis

Inference container: Runs the large language model (LLM) or connects to your preferred AI provider

Telephony gateway: Manages SIP/PSTN connectivity and call routing

API gateway: Handles authentication, rate limiting, and integration endpoints

Data persistence layer: Stores conversation logs, analytics, and configuration (within your infrastructure)

Deployment options range from single-server configurations for pilot programs to Kubernetes-orchestrated clusters for enterprise-scale operations handling thousands of concurrent calls.
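To make the component list above more concrete, here is a minimal Python sketch that models the five components and assembles them into a Compose-style dictionary. Every service name, image name, port mapping, and dependency below is a hypothetical placeholder chosen for illustration; none of it reflects Trillet's actual container layout.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """One containerized component. All names and images are hypothetical."""
    name: str
    image: str
    ports: list = field(default_factory=list)
    depends_on: list = field(default_factory=list)


# The five components described above, with placeholder image names.
SERVICES = [
    Service("data-store", "example/data-store:1.0"),
    Service("inference", "example/inference:1.0", depends_on=["data-store"]),
    Service("voice-processing", "example/voice:1.0", depends_on=["inference"]),
    Service("telephony-gateway", "example/sip-gateway:1.0",
            ports=["5060:5060/udp"], depends_on=["voice-processing"]),
    Service("api-gateway", "example/api-gateway:1.0",
            ports=["443:8443"], depends_on=["voice-processing", "data-store"]),
]


def to_compose(services):
    """Build a Compose-style dictionary and reject undefined dependencies."""
    names = {s.name for s in services}
    compose = {"services": {}}
    for s in services:
        missing = [d for d in s.depends_on if d not in names]
        if missing:
            raise ValueError(f"{s.name} depends on undefined services: {missing}")
        entry = {"image": s.image}
        if s.ports:
            entry["ports"] = s.ports
        if s.depends_on:
            entry["depends_on"] = s.depends_on
        compose["services"][s.name] = entry
    return compose


compose = to_compose(SERVICES)
```

The resulting dictionary could be serialized to YAML for a single-server pilot. A Kubernetes deployment would express the same relationships through its own manifests instead.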

What Are the Technical Requirements for On-Premise Deployment?

Minimum infrastructure requirements depend on concurrent call volume, but typical enterprise deployments start with 8-core CPUs, 32GB RAM, and SSD storage with low-latency network connectivity.

Hardware requirements by scale:

| Concurrent Calls | CPU Cores | RAM | Storage | Network |
| --- | --- | --- | --- | --- |
| 1-50 | 8 cores | 32GB | 500GB SSD | 1 Gbps |
| 50-200 | 16 cores | 64GB | 1TB SSD | 10 Gbps |
| 200-500 | 32 cores | 128GB | 2TB NVMe | 10 Gbps |
| 500+ | Kubernetes cluster | Scaled | Distributed | Redundant |
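As a rough planning aid, the sizing table can be turned into a lookup function. The sketch below copies the figures from the table. Because adjacent ranges share a boundary value (50, 200, 500), it assumes each upper bound is inclusive, which is an interpretation rather than something the table states. The `HardwareTier` and `recommend_tier` names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class HardwareTier:
    max_calls: int   # upper bound of the tier, assumed inclusive
    cpu_cores: int
    ram_gb: int
    storage: str
    network: str


# Single-server rows from the table; the 500+ row is handled separately.
TIERS = [
    HardwareTier(50, 8, 32, "500GB SSD", "1 Gbps"),
    HardwareTier(200, 16, 64, "1TB SSD", "10 Gbps"),
    HardwareTier(500, 32, 128, "2TB NVMe", "10 Gbps"),
]


def recommend_tier(concurrent_calls: int) -> Optional[HardwareTier]:
    """Return the smallest single-server tier that covers the call volume.

    Returns None above 500 concurrent calls, where the table points to a
    Kubernetes cluster rather than a fixed server specification.
    """
    if concurrent_calls < 1:
        raise ValueError("concurrent_calls must be at least 1")
    for tier in TIERS:
        if concurrent_calls <= tier.max_calls:
            return tier
    return None
```

For example, `recommend_tier(120)` returns the 16-core, 64GB tier, while `recommend_tier(800)` returns `None` to signal that a clustered deployment should be planned instead.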

Software prerequisites:

Docker Engine 20.10+ or containerd 1.6+

Kubernetes 1.25+ (for orchestrated deployments)

TLS certificates for encrypted communications

SIP trunk connectivity or PSTN gateway

Network configuration allowing required ports (SIP, RTP, HTTPS)
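The version minimums above are easy to check as part of a pre-deployment script. The following sketch compares a reported version string against the minimums listed in this section. The `MINIMUMS` table and function names are illustrative, and only the major and minor numbers are compared.

```python
import re

# Minimum (major, minor) versions taken from the prerequisites list above.
MINIMUMS = {
    "docker": (20, 10),
    "containerd": (1, 6),
    "kubernetes": (1, 25),
}


def parse_version(text: str) -> tuple:
    """Extract (major, minor) from strings such as '24.0.7' or 'v1.28.3'."""
    match = re.search(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(component: str, version_text: str) -> bool:
    """Return True if the reported version satisfies the listed minimum."""
    return parse_version(version_text) >= MINIMUMS[component]
```

The version string itself would come from the host, for instance the output of `docker version --format '{{.Server.Version}}'`. How that output is collected is left to the surrounding tooling.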

LLM considerations:

On-premise deployments can take one of three approaches:

Run local LLM inference (requires GPU infrastructure - NVIDIA A100 or H100 recommended)

Connect to cloud LLM providers via secure API calls (simpler but introduces external dependency)

Use hybrid approaches where conversation data stays local while inference calls are anonymized
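The hybrid approach depends on stripping identifying details before a prompt leaves your network. The sketch below shows one simple way this could work: regular expressions replace matches with placeholder tokens, and the token-to-value mapping stays on local infrastructure so responses can be re-personalized. The three patterns are deliberately narrow illustrations (US-style phone and SSN formats, basic email addresses). This is an assumption about how anonymization might be done, not a description of any vendor's implementation, and production systems need far broader PII and PHI coverage.

```python
import re

# Illustrative patterns only; real deployments need much wider coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
}


def anonymize(text: str):
    """Replace matches with placeholder tokens.

    Returns the redacted text plus a token-to-original mapping that is
    meant to stay on local infrastructure.
    """
    mapping = {}

    def make_substitute(label):
        def substitute(match):
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = match.group(0)
            return token
        return substitute

    for label, pattern in PATTERNS.items():
        text = pattern.sub(make_substitute(label), text)
    return text, mapping


def restore(text: str, mapping: dict) -> str:
    """Re-insert the original values into text returned by the cloud LLM."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


transcript = "Call me at 555-123-4567 or jane@example.com, SSN 123-45-6789."
redacted, lookup = anonymize(transcript)
```

Only `redacted` would be sent to the external provider. `lookup` never leaves the local environment, and `restore` is applied to the model's reply before it reaches the caller.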

How Does On-Premise Compare to Cloud Voice AI?

On-premise deployment trades operational simplicity for control, requiring dedicated infrastructure management while eliminating third-party data dependencies.

| Factor | On-Premise (Docker) | Cloud-Only |
| --- | --- | --- |
| Data sovereignty | Full control - data never leaves your infrastructure | Data resides on provider servers |
| Compliance | Simplified auditing - single party responsible | Requires vendor compliance attestation |
| Latency | Lower (no internet round-trips) | Variable (depends on provider proximity) |
| Scalability | Manual capacity planning required | Automatic scaling |
| Uptime | Your responsibility | Provider SLA (typically 99.9-99.99%) |
| Cost structure | CapEx + fixed OpEx | Variable usage-based |
| Setup complexity | Higher (infrastructure required) | Lower (API integration) |
| Maintenance | Internal team or managed service | Provider handles updates |

For organizations with existing data center operations and compliance requirements, on-premise deployment often represents lower total cost of ownership despite higher initial setup complexity. The elimination of per-minute cloud fees becomes significant at scale - enterprises processing 100,000+ minutes monthly typically see 40-60% cost reduction with on-premise infrastructure.
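The cost comparison comes down to simple arithmetic: usage-based cloud fees grow with minutes, while on-premise costs are largely fixed once hardware is amortized. The sketch below shows that calculation. All input figures (per-minute rate, hardware cost, amortization period, operating cost) are hypothetical placeholders chosen only to illustrate the formula. They are not benchmarks or quoted prices, so substitute your own numbers.

```python
def monthly_cloud_cost(minutes: float, per_minute_rate: float) -> float:
    """Usage-based cost: scales linearly with call minutes."""
    return minutes * per_minute_rate


def monthly_on_prem_cost(capex: float, amortization_months: int,
                         fixed_opex: float) -> float:
    """Amortized hardware cost plus fixed monthly operating cost."""
    return capex / amortization_months + fixed_opex


def break_even_minutes(per_minute_rate: float, capex: float,
                       amortization_months: int, fixed_opex: float) -> float:
    """Monthly minutes at which cloud and on-premise costs are equal."""
    return monthly_on_prem_cost(capex, amortization_months, fixed_opex) / per_minute_rate


# Hypothetical inputs for illustration only.
RATE = 0.10       # cloud price per minute
CAPEX = 72_000    # up-front hardware spend
MONTHS = 36       # amortization period
OPEX = 3_000      # fixed monthly operations cost

cloud = monthly_cloud_cost(100_000, RATE)
on_prem = monthly_on_prem_cost(CAPEX, MONTHS, OPEX)
saving = 1 - on_prem / cloud
threshold = break_even_minutes(RATE, CAPEX, MONTHS, OPEX)
```

With these placeholder inputs, on-premise costs half as much as cloud at 100,000 minutes per month, and the two options cost the same at roughly 50,000 minutes. Different rates or hardware costs will move the break-even point substantially.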

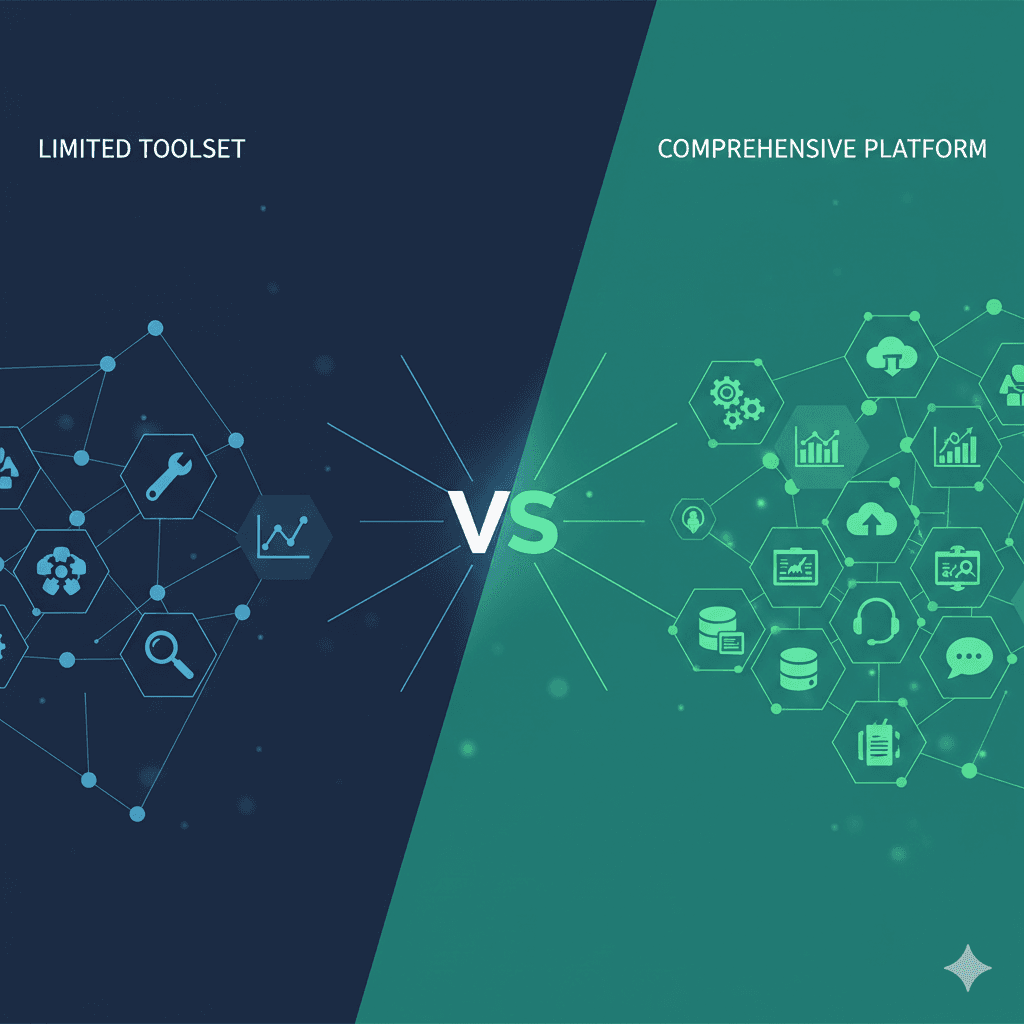

Which Voice AI Platforms Support On-Premise Deployment?

Among the modern voice AI platforms compared here, Trillet is currently the only one offering true on-premise deployment via Docker, with the complete application layer running within customer infrastructure.

Most voice AI vendors operate exclusively as cloud services. Platforms like Retell AI, Vapi, and Synthflow require data to flow through their infrastructure - even "enterprise" tiers typically offer only dedicated cloud instances rather than on-premise options.

Platform comparison for on-premise capability:

| Platform | On-Premise Option | Deployment Model |
| --- | --- | --- |
| Trillet Enterprise | Yes - Docker containers | Full application layer on-prem |
| Retell AI | No | Cloud-only (dedicated instances available) |
| Vapi | No | Cloud-only |
| Synthflow | No | Cloud-only |
| Five9 | Limited | Hybrid options for some components |
| Genesys | Yes | Full on-prem (legacy architecture) |

Legacy contact center platforms like Genesys offer on-premise deployment but require significant infrastructure investment and lack modern conversational AI capabilities. Trillet bridges this gap by providing contemporary voice AI technology in a deployable container format.

What Security Benefits Does On-Premise Deployment Provide?

On-premise deployment eliminates entire categories of security risk by removing data transmission to external parties and enabling direct integration with existing security infrastructure.

Security advantages:

Network isolation: Voice AI runs within your security perimeter, behind existing firewalls and intrusion detection systems

Access control integration: Direct LDAP/Active Directory integration for user management

Encryption control: You manage encryption keys rather than trusting third-party key management

Audit logging: Complete visibility into all system access and data operations

Vulnerability management: Patch and update on your schedule, aligned with change management processes

Compliance implications:

For HIPAA-covered entities, on-premise deployment simplifies Business Associate Agreement (BAA) requirements - you're not sharing PHI with a third party if the voice AI runs entirely on your infrastructure. Similarly, for Australian enterprises subject to APRA CPS 234, on-premise deployment provides clearer accountability for information security controls.

The security model shifts from "trust the vendor" to "verify your own controls" - a trade-off that security-conscious enterprises often prefer.

What Does the Implementation Timeline Look Like?

Enterprise on-premise voice AI deployment typically requires 6-8 weeks from contract to production, depending on infrastructure readiness and integration complexity.

Typical implementation phases:

Weeks 1-2: Infrastructure preparation

Provision hardware or allocate cloud resources

Configure networking and security groups

Establish SIP trunk connectivity

Weeks 2-4: Platform deployment

Deploy Docker containers

Configure authentication and access control

Integrate with telephony infrastructure

Weeks 4-6: Integration and training

Connect to CRM and legacy systems

Configure conversation flows and knowledge base

Train voice AI on organization-specific content

Weeks 6-8: Testing and go-live

User acceptance testing

Load testing at expected call volumes

Phased production rollout

Organizations with mature DevOps practices and existing container orchestration often compress this timeline. Conversely, complex legacy system integrations may extend the integration phase. Call centers running ViciDial or Asterisk-based dialers benefit from Trillet's production-proven AGI/AMI integration, which connects seamlessly with on-premise voice AI containers.

Frequently Asked Questions

Can on-premise voice AI integrate with cloud LLM providers?

Yes. Most on-premise deployments use hybrid architectures where conversation audio and transcripts remain local while inference calls to cloud LLMs (OpenAI, Anthropic, etc.) transmit only anonymized prompts. This approach balances data sovereignty with access to frontier AI models without requiring expensive local GPU infrastructure.

What happens if the on-premise system fails?

Enterprise deployments should implement high availability through container orchestration (Kubernetes), database replication, and redundant telephony connections. Properly configured systems can achieve 99.99% uptime. Managed service providers like Trillet include 24/7 monitoring and proactive management as part of enterprise contracts.
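It helps to translate an uptime percentage into the downtime it actually permits. The short sketch below does that conversion; the function name is illustrative.

```python
def allowed_downtime_minutes(uptime_percent: float,
                             period_days: float = 365.0) -> float:
    """Minutes of downtime permitted by an uptime target over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


# 99.99% allows about 52.6 minutes per year; 99.9% allows about 8.8 hours.
four_nines = allowed_downtime_minutes(99.99)
three_nines = allowed_downtime_minutes(99.9)
```

A 99.99% target leaves under an hour of total downtime per year, which is why redundancy at the orchestration, database, and telephony layers matters for on-premise deployments.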

How do I get started with on-premise voice AI deployment?

Contact Trillet Enterprise to discuss your specific requirements. The team will assess your infrastructure, compliance needs, and integration requirements to develop a deployment plan tailored to your organization.

Is on-premise more expensive than cloud voice AI?

Initial setup costs are higher, but total cost of ownership often favors on-premise at scale. Organizations processing 100,000+ monthly minutes typically see 40-60% lower ongoing costs compared to per-minute cloud pricing. The break-even point depends on call volume, existing infrastructure, and internal operations capabilities.

Can I run voice AI on existing virtualization infrastructure?

Docker containers run on VMware, Hyper-V, and other virtualization platforms. However, direct bare-metal or container-optimized infrastructure delivers better performance for real-time voice processing. Discuss your specific infrastructure with the Trillet Enterprise team to determine optimal deployment architecture.

Conclusion

On-premise voice AI deployment via Docker provides enterprises with the data sovereignty, security control, and compliance alignment that cloud-only solutions cannot offer. For organizations in regulated industries or those with strict data governance requirements, on-premise deployment is often the only viable path to voice AI adoption.

Trillet stands alone in offering true on-premise deployment - the complete voice AI application layer running within your infrastructure via Docker containers. Combined with zero-engineering-lift managed services, enterprises can achieve the benefits of on-premise deployment without building internal voice AI expertise.

Contact Trillet Enterprise to discuss your on-premise voice AI requirements and implementation timeline.
