Choosing Between Cloud, Hybrid, and On-Premise Voice AI

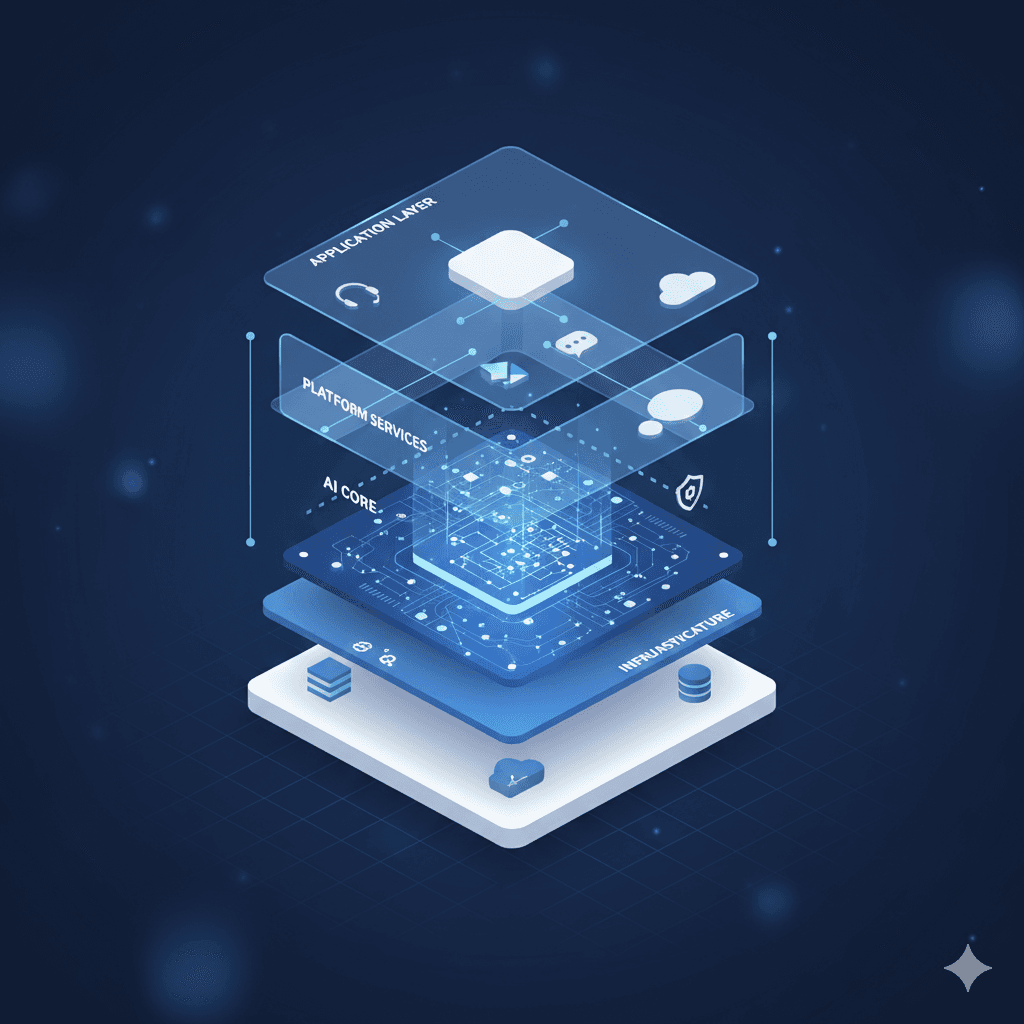

Enterprise voice AI deployment models range from fully cloud-hosted to completely on-premise, with hybrid architectures offering middle-ground flexibility for organizations balancing performance, compliance, and control.

The deployment architecture decision shapes everything that follows: data residency compliance, latency characteristics, operational complexity, and long-term costs. Most voice AI platforms lock enterprises into cloud-only deployment, but regulated industries and organizations with strict data sovereignty requirements need alternatives. This guide examines the technical and business trade-offs across all three deployment models.

For enterprises evaluating deployment options, contact the Trillet Enterprise team to discuss which architecture fits your compliance and operational requirements.

What Defines Each Deployment Model?

Understanding the fundamental architecture of each model clarifies their distinct trade-offs.

Cloud Deployment

Cloud-hosted voice AI runs entirely on the vendor's infrastructure. Voice traffic routes to vendor data centers, AI processing happens on shared or dedicated cloud resources, and all data storage occurs within the vendor's cloud environment.

Characteristics:

Zero infrastructure management for the customer

Vendor handles scaling, updates, and maintenance

Data resides in vendor-controlled cloud regions

Typically multi-tenant architecture (shared resources)

Fastest deployment timeline

On-Premise Deployment

On-premise voice AI runs within the customer's own data center or private cloud. The voice AI application layer deploys on customer-controlled infrastructure, with all voice traffic and data remaining within the organization's network boundary.

Characteristics:

Complete data sovereignty and control

Customer manages infrastructure and updates

Air-gapped deployment possible for highest security

Single-tenant by definition

Longest deployment timeline but maximum control

Hybrid Deployment

Hybrid architectures split components across cloud and on-premise environments. Common patterns include on-premise voice processing with cloud-based AI inference, or on-premise data storage with cloud-hosted application logic.

Characteristics:

Balances control and convenience

Complexity depends on how components are split between environments

Requires integration between environments

Can optimize for specific compliance requirements

Medium deployment timeline

Technical Trade-Offs: Latency, Reliability, and Performance

Voice AI has uniquely demanding performance requirements. Conversational latency directly affects caller experience, and every network hop an architecture introduces adds to end-to-end response time.

Latency Considerations

Deployment Model | Typical Latency Impact | Key Factors |
--- | --- | --- |
Cloud | Variable (100-300ms network overhead) | Geographic distance to data center; internet quality; shared resource contention |
On-Premise | Lowest (sub-50ms network overhead) | Local network only; no internet traversal for voice traffic |
Hybrid | Moderate (50-200ms, depending on split) | Which components are remote; optimization of the cloud-to-premise connection |

For organizations with callers geographically distant from vendor cloud regions, on-premise deployment eliminates the latency tax of cross-continent voice traffic. A contact center in Sydney connecting to US-based cloud infrastructure adds 150-200ms of round-trip latency before AI processing even begins.
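The Sydney figure follows from physics before any routing overhead is counted. The minimal sketch below estimates the propagation-only round trip along the great-circle path between two cities; the coordinates, the 6,371 km mean Earth radius, and the roughly 200,000 km/s signal speed in optical fiber are assumptions chosen for illustration. For Sydney to Los Angeles it gives roughly 12,000 km and a floor of about 120ms, before cable detours, routing, and queuing push real-world round trips higher.

```python
import math

# Assumptions (illustrative, not from the article): great-circle path,
# mean Earth radius, and light in fiber at ~2/3 of its vacuum speed.
EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_S = 200_000.0


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.atan2(math.sqrt(a), math.sqrt(1 - a))


def min_rtt_ms(distance_km: float) -> float:
    """Propagation-only round trip (there and back) at fiber speed, in ms."""
    return 2 * distance_km / FIBER_KM_PER_S * 1000


sydney = (-33.8688, 151.2093)
los_angeles = (34.0522, -118.2437)

d = great_circle_km(*sydney, *los_angeles)
print(f"distance ~{d:,.0f} km, propagation floor ~{min_rtt_ms(d):.0f} ms RTT")
```

Because this is a lower bound, no amount of cloud-side optimization recovers it; only moving processing closer to callers does.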

Reliability Architecture

Cloud reliability depends on:

Vendor SLA guarantees (typically 99.9-99.99%)

Multi-region failover capabilities

Customer's internet connectivity to cloud

Shared infrastructure resilience

On-premise reliability depends on:

Customer data center redundancy

Internal network architecture

Customer operational capabilities

Hardware maintenance and replacement

Hybrid reliability introduces:

Dependency on both environments

More potential failure points

Complex failover logic

Integration layer as potential bottleneck

Organizations with mature data center operations may achieve higher reliability on-premise than cloud alternatives offer. Those without dedicated infrastructure teams typically achieve better reliability with managed cloud services.
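Availability percentages are easier to compare as downtime budgets. The sketch below converts an availability figure into expected minutes of downtime per year and shows why a hybrid call path that depends on both environments is less available than either environment alone: serial dependencies multiply availabilities, while redundant components that can fail over combine in parallel. It assumes independent failures and a 365-day year; the figures are illustrative, not vendor SLAs.

```python
import math

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years


def downtime_minutes(availability: float) -> float:
    """Expected downtime per year for an availability given as a fraction."""
    return (1 - availability) * MINUTES_PER_YEAR


def serial(*availabilities: float) -> float:
    """Every component must be up, e.g. a call path crossing both environments."""
    return math.prod(availabilities)


def parallel(*availabilities: float) -> float:
    """Service survives while any one redundant component is up."""
    return 1 - math.prod(1 - a for a in availabilities)


for label, a in [("99.9% SLA", 0.999),
                 ("99.99% SLA", 0.9999),
                 ("hybrid, both 99.9%, in series", serial(0.999, 0.999)),
                 ("redundant pair, 99.9% each", parallel(0.999, 0.999))]:
    print(f"{label}: {a:.4%} available, ~{downtime_minutes(a):.0f} min/yr down")
```

Two environments at 99.9% each in series yield about 99.8%, roughly 1,050 minutes of downtime a year instead of about 526; the same pair configured for failover approaches 99.9999%.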

Scalability Patterns

Cloud deployment excels at elastic scaling. Handling 100 concurrent calls Monday morning and 10,000 during a marketing campaign requires no customer-side infrastructure planning in cloud models, within whatever concurrency limits the vendor's plan or contract sets.

On-premise deployment requires capacity planning. Infrastructure must be provisioned for peak load, meaning resources sit idle during low-traffic periods. However, capacity is guaranteed without contention from other tenants.
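One common way to turn peak load into a channel count is the Erlang B formula from telephony traffic engineering, which gives the probability that a new call is blocked when a fixed number of channels serves a given busy-hour load. The sketch below is an illustrative sizing aid, not a method from this guide; it assumes blocked calls are simply lost (no queueing or retries) and that offered load in Erlangs equals calls per hour multiplied by average call duration in hours. The call volume and duration are hypothetical.

```python
# Erlang B sizing sketch (standard telephony model, used here for illustration).
# Assumptions: blocked calls are lost, not queued or retried; load is busy-hour.


def erlang_b(erlangs: float, lines: int) -> float:
    """Blocking probability for `lines` channels offered `erlangs` of traffic."""
    b = 1.0  # B(E, 0) = 1
    for m in range(1, lines + 1):
        # Standard recursion: B(E, m) = E*B(E, m-1) / (m + E*B(E, m-1))
        b = erlangs * b / (m + erlangs * b)
    return b


def lines_needed(erlangs: float, max_blocking: float) -> int:
    """Smallest channel count whose blocking probability is <= max_blocking."""
    if max_blocking <= 0:
        raise ValueError("max_blocking must be positive")
    lines, b = 0, 1.0
    while b > max_blocking:
        lines += 1
        b = erlangs * b / (lines + erlangs * b)
    return lines


calls_per_hour = 1_200  # hypothetical busy-hour volume
avg_call_minutes = 5    # hypothetical average call duration
offered = calls_per_hour * avg_call_minutes / 60  # 100 Erlangs

print(f"{offered:.0f} Erlangs -> {lines_needed(offered, 0.01)} channels for 1% blocking")
```

The result comes out somewhat above 100 channels because random arrivals fluctuate around the average, which is exactly the headroom that sits idle outside the busy hour.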

Hybrid models can use cloud for burst capacity while maintaining on-premise for baseline load, optimizing cost while ensuring capacity for traffic spikes.
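The burst pattern can be sketched as a simple overflow rule: calls stay on-premise up to a fixed baseline capacity and the remainder go to cloud capacity. The `route` function, the 150-channel baseline, and the hourly load profile are hypothetical values for illustration, not a description of any product's routing.

```python
from dataclasses import dataclass


@dataclass
class Split:
    on_prem: int
    cloud: int


def route(concurrent_calls: int, on_prem_capacity: int) -> Split:
    """Fill on-premise capacity first, overflow the rest to cloud."""
    on_prem = min(concurrent_calls, on_prem_capacity)
    return Split(on_prem=on_prem, cloud=concurrent_calls - on_prem)


# Hypothetical hourly peaks of concurrent calls, including a campaign spike.
hourly_peaks = [80, 100, 120, 110, 95, 2_000, 10_000, 400]
baseline = 150  # hypothetical on-prem channel count sized near normal peak

splits = [route(load, baseline) for load in hourly_peaks]
burst_hours = sum(1 for s in splits if s.cloud > 0)
print(f"{burst_hours} of {len(hourly_peaks)} hours used cloud overflow")
```

With this profile only the three spike hours touch the cloud, whereas provisioning on-premise for the 10,000-call peak would leave most of that capacity idle for the rest of the day.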

Compliance and Data Sovereignty Requirements

For regulated industries, deployment architecture often determines compliance feasibility.

Data Residency

Many regulations mandate where data can be processed and stored:

GDPR (EU): Processing of personal data must comply with EU data protection standards; transfers outside the EU/EEA require specific safeguards

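APRA CPS 234 (Australia): Regulated financial institutions must maintain information security capability commensurate with the threats they face, with the board ultimately responsible; offshoring of data and services is additionally subject to APRA's outsourcing and operational risk requirements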

HIPAA (US): Protected health information (PHI) requires specific safeguards; a Business Associate Agreement (BAA) must be in place with any cloud vendor that handles PHI

Government/Defense: Often require data to remain within national boundaries or specific classified environments

Cloud deployment satisfies these requirements only if the vendor offers data centers in compliant regions. Many voice AI vendors operate from limited geographic locations, creating compliance gaps for international enterprises.

On-premise deployment provides complete data residency control. Data never leaves the organization's infrastructure, simplifying compliance documentation and audit responses.

Audit and Inspection Rights

Some regulatory frameworks require audit rights over systems processing sensitive data. Cloud deployments complicate this with shared infrastructure and vendor access restrictions.

On-premise deployments allow unrestricted audit access. Security teams can inspect any component, penetration testers can operate without vendor coordination, and compliance auditors can examine infrastructure directly.

Air-Gapped Requirements

Government agencies, defense contractors, and critical infrastructure operators may require air-gapped deployment with no external network connectivity.

Only on-premise deployment supports true air-gapped operation. Cloud and hybrid models depend on connectivity to external infrastructure by definition.

Cost Analysis Across Deployment Models

Total cost of ownership varies significantly based on scale, duration, and internal capabilities.

Cloud Cost Structure

Advantages: No upfront capital expenditure; predictable per-minute or per-seat pricing; no infrastructure staff required

Disadvantages: Ongoing operational expenditure indefinitely; costs scale linearly with usage; premium pricing for dedicated resources

Best for: Organizations without data center infrastructure; variable or unpredictable call volumes; rapid deployment requirements

On-Premise Cost Structure

Advantages: Lower long-term operational costs at scale; capital investment amortizes over time; no per-minute vendor fees

Disadvantages: Significant upfront infrastructure investment; requires operational staff; capacity planning complexity

Best for: High-volume deployments (1M+ minutes annually); existing data center infrastructure; long-term strategic investment

Hybrid Cost Structure

Advantages: Optimize specific workloads for cost; use cloud for variable capacity; keep high-volume baseline on-premise

Disadvantages: Complexity of dual management; integration costs; potential for cost optimization to become cost confusion

Best for: Large organizations with sophisticated FinOps practices; workloads with distinct cloud-optimal and premise-optimal components

Break-Even Analysis

At enterprise scale (1M+ minutes annually), on-premise deployment typically achieves cost parity with cloud within 18-24 months and delivers ongoing savings thereafter. The break-even point depends on:

Internal labor costs for infrastructure management

Existing data center capacity and utilization

Cloud pricing and committed use discounts

Hardware refresh cycles and depreciation
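The break-even point itself is simple arithmetic once the inputs are known: upfront on-premise investment divided by the monthly saving versus cloud. The sketch below uses hypothetical placeholder figures (annual volume, per-minute cloud rate, capex, and monthly on-premise running cost), not Trillet or market pricing; substitute your own values for the factors listed above. It does not model refresh cycles, depreciation schedules, or discounting, all of which move the result in practice.

```python
import math


def break_even_months(capex: float, on_prem_monthly: float, cloud_monthly: float) -> float:
    """Months to recover upfront capex from monthly savings; inf if never."""
    monthly_savings = cloud_monthly - on_prem_monthly
    if monthly_savings <= 0:
        return math.inf  # on-premise never pays back at these inputs
    return capex / monthly_savings


# All inputs below are hypothetical placeholders.
minutes_per_year = 1_200_000
cloud_rate_per_minute = 0.10  # USD, all-in
cloud_monthly = minutes_per_year / 12 * cloud_rate_per_minute  # 10,000

capex = 150_000          # hardware plus deployment
on_prem_monthly = 3_000  # staff share, power, support

months = break_even_months(capex, on_prem_monthly, cloud_monthly)
print(f"break-even after ~{months:.1f} months")
```

Because the payback period is capex divided by the monthly gap, it is most sensitive to the internal labor cost folded into `on_prem_monthly`: every dollar of in-house running cost shrinks the saving that repays the investment.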

The On-Premise Challenge: Most Platforms Cannot Do It

A critical market reality shapes enterprise options: the vast majority of voice AI platforms offer cloud-only deployment. Their architectures were built cloud-native with no consideration for on-premise requirements.

This limitation creates genuine problems for:

Financial institutions with strict data residency mandates

Healthcare organizations requiring PHI containment

Government agencies with sovereignty requirements

Defense and intelligence contractors

Multinational corporations with varying regional requirements

Trillet is the only voice application layer that can be hosted on-premise via Docker. This capability emerged from enterprise requirements rather than being retrofitted, enabling true data sovereignty without sacrificing AI capabilities.

What On-Premise Deployment Requires

Not all "on-premise" claims are equal. True on-premise deployment means:

Voice AI application layer runs on customer infrastructure

Voice traffic never leaves customer network

Data storage occurs on customer-controlled systems

No dependency on external services for core functionality

Customer controls update and maintenance timing

Some vendors claim "private cloud" or "dedicated instance" as on-premise alternatives. These are not equivalent. Dedicated cloud instances still process data on vendor infrastructure, even if resources are not shared with other tenants.

Making the Deployment Decision

The optimal deployment model depends on organizational context rather than technical superiority of any single approach.

Choose Cloud When

Compliance requirements permit cloud data processing

Organization lacks data center infrastructure or expertise

Call volumes are variable or unpredictable

Speed to deployment is the primary constraint

Operational simplicity outweighs control requirements

Choose On-Premise When

Regulations mandate data residency within organizational control

Air-gapped deployment is required

Call volumes justify infrastructure investment (typically 1M+ minutes/year)

Organization has mature data center operations

Long-term cost optimization is prioritized

Choose Hybrid When

Different workloads have different compliance requirements

Burst capacity needs exceed practical on-premise provisioning

Organization wants to migrate gradually from cloud to on-premise

Specific AI capabilities require cloud while voice processing stays local
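The criteria above can be encoded as a rough first-pass screen. The sketch below is hypothetical: the field names, the use of this guide's 1M-minute threshold, and especially the order in which the rules are checked are assumptions, and a real decision still needs the compliance and cost analysis described earlier.

```python
from dataclasses import dataclass
from enum import Enum


class Model(Enum):
    CLOUD = "cloud"
    ON_PREMISE = "on-premise"
    HYBRID = "hybrid"


@dataclass
class Requirements:
    air_gapped: bool = False
    residency_within_org_control: bool = False
    mixed_compliance_workloads: bool = False
    burst_exceeds_on_prem: bool = False
    cloud_only_ai_features: bool = False
    migrating_gradually: bool = False
    annual_minutes: int = 0
    mature_datacenter_ops: bool = False


def recommend(r: Requirements) -> Model:
    # Hard constraint: only on-premise supports air-gapped operation.
    if r.air_gapped:
        return Model.ON_PREMISE
    # Regulated and unregulated workloads side by side suggest a split.
    if r.mixed_compliance_workloads:
        return Model.HYBRID
    # A uniform residency mandate keeps everything on-premise.
    if r.residency_within_org_control:
        return Model.ON_PREMISE
    if r.burst_exceeds_on_prem or r.cloud_only_ai_features or r.migrating_gradually:
        return Model.HYBRID
    # Volume only justifies on-premise when operations can support it.
    if r.annual_minutes >= 1_000_000 and r.mature_datacenter_ops:
        return Model.ON_PREMISE
    return Model.CLOUD


print(recommend(Requirements(annual_minutes=2_000_000, mature_datacenter_ops=True)).value)
```

Checking hard constraints before cost signals mirrors the guide's point that compliance often determines feasibility before economics is even considered.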

Comparison: Deployment Capabilities Across Voice AI Platforms

Capability | Trillet Enterprise | Typical Cloud Platform | DIY (Retell/Vapi) |
--- | --- | --- | --- |
Cloud deployment | Available | Yes | Yes |
On-premise deployment | Docker-based, fully supported | Not available | Not available |
Hybrid architecture | Flexible configuration | Limited | Build yourself |
Data residency regions | APAC, EMEA, NA + on-premise | Limited regions | Provider-dependent |
Air-gapped support | Yes | No | No |
Managed updates (on-prem) | Included in service | N/A | Self-managed |

Frequently Asked Questions

Can I switch deployment models after initial implementation?

Migration between deployment models is possible but not trivial. Cloud to on-premise requires infrastructure provisioning and data migration. On-premise to cloud requires compliance re-evaluation. Plan for the long term, but know that migration paths exist. Trillet Enterprise supports migration assistance for organizations whose requirements evolve.

What infrastructure does on-premise deployment require?

On-premise voice AI typically requires container orchestration (Kubernetes or Docker Swarm), compute resources scaled to concurrent call capacity, and storage for configuration and logging. Trillet's Docker-based deployment minimizes infrastructure complexity while supporting enterprise-grade deployments. Contact Trillet Enterprise for infrastructure sizing guidance.

How does on-premise deployment handle AI model updates?

On-premise deployments receive AI model updates through managed distribution channels. Updates can be staged and tested before production deployment, giving enterprises control over change management while maintaining access to improved AI capabilities. Trillet Enterprise manages update distribution with customer-controlled deployment timing.

What happens if cloud connectivity fails in a hybrid deployment?

Well-architected hybrid deployments include graceful degradation. On-premise components should continue functioning independently during cloud outages, potentially with reduced AI capabilities. Critical voice handling and data capture continue while cloud-dependent features queue for later processing.
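The queue-and-replay behavior described above can be sketched as a small dispatcher: cloud-dependent tasks (for example, post-call summaries) are sent immediately while the cloud link is up, queued while it is down, and drained in order when connectivity returns. Local voice handling is assumed to run independently and is not shown. `DegradingDispatcher` and its methods are hypothetical names; a production version would also need durable storage for the queue, retries when a send fails, and health checks to detect outages.

```python
from collections import deque
from typing import Callable


class DegradingDispatcher:
    """Hypothetical hybrid dispatcher for cloud-dependent tasks."""

    def __init__(self, send_to_cloud: Callable[[str], None]) -> None:
        self.send_to_cloud = send_to_cloud
        self.cloud_up = True
        self.pending: deque[str] = deque()  # in-memory only in this sketch

    def submit(self, task: str) -> None:
        if self.cloud_up:
            self.send_to_cloud(task)
        else:
            self.pending.append(task)  # defer instead of dropping

    def set_cloud_status(self, up: bool) -> None:
        self.cloud_up = up
        # On recovery, replay the backlog in arrival order.
        while self.cloud_up and self.pending:
            self.send_to_cloud(self.pending.popleft())


sent: list[str] = []
dispatcher = DegradingDispatcher(sent.append)
dispatcher.submit("summary:call-1")
dispatcher.set_cloud_status(False)   # outage begins
dispatcher.submit("summary:call-2")  # queued locally
dispatcher.set_cloud_status(True)    # link restored, backlog drains
print(sent)
```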

Conclusion

Deployment model selection fundamentally shapes enterprise voice AI outcomes. Cloud deployment offers operational simplicity and rapid deployment. On-premise provides maximum control and compliance flexibility. Hybrid architectures balance both approaches for complex requirements.

For regulated industries and organizations with strict data sovereignty mandates, on-premise capability is not optional. Trillet remains the only voice AI platform offering true on-premise deployment via Docker, enabling enterprises to deploy voice AI within their own infrastructure while maintaining full AI capabilities.

Explore Trillet Enterprise for deployment architecture consultation, or review the Enterprise Voice AI Orchestration Guide for comprehensive deployment planning.

Related Resources:

Voice AI 99.99% Uptime SLA Requirements - Reliability architecture by deployment model

Enterprise Voice AI Vendor Evaluation Framework - Systematic vendor assessment criteria

Voice AI for Australian Enterprises: APRA CPS 234 and IRAP Compliance