Voice AI Quality Assurance Monitoring: What Agencies Need to Know in 2026

Voice AI quality assurance requires real-time call monitoring, automated scoring, and client-facing dashboards to maintain service standards and reduce churn across your agency's portfolio.

Deploying voice AI agents for clients is only half the battle. The agencies that retain clients long-term are those that proactively monitor quality, catch issues before clients notice, and demonstrate measurable value through data. Without a quality assurance framework, you're flying blind and waiting for complaints instead of preventing them.

Which Trillet product is right for you?

Small businesses: Trillet AI Receptionist - 24/7 call answering starting at $29/month

Agencies: Trillet White-Label - Studio $99/month or Agency $299/month (unlimited sub-accounts)

What is Voice AI Quality Assurance?

Voice AI quality assurance is the systematic process of monitoring, evaluating, and improving AI agent performance across calls to ensure consistent service delivery.

Unlike traditional call center QA that relies on random sampling and manual review, voice AI QA leverages automated analysis at scale. Every call can be evaluated against objective criteria: response accuracy, latency, sentiment detection, successful appointment bookings, and proper call routing. This transforms QA from a reactive spot-check into a proactive early warning system.

For agencies managing multiple client accounts, QA monitoring serves three critical functions:

Issue detection - Catch problems before clients report them

Performance optimization - Identify training gaps and conversation flow improvements

Value demonstration - Prove ROI with concrete metrics

What Metrics Should Agencies Track for Voice AI Quality?

The core metrics for voice AI quality fall into five categories: responsiveness, accuracy, outcomes, sentiment, and technical performance.

Responsiveness Metrics:

First response latency - Time from caller speech end to AI response start (target: under 600ms for natural conversation flow)

Average handling time - Total call duration compared to benchmarks

Queue wait time - Time before the AI picks up (should be near-instant)

Accuracy Metrics:

Intent recognition rate - Percentage of caller intents correctly identified

Information accuracy - Correctness of business details provided (hours, services, pricing)

FAQ resolution rate - Percentage of common questions answered without escalation

Outcome Metrics:

Appointment booking rate - Calls that result in scheduled appointments

Lead capture rate - Valid contact information collected

Transfer success rate - Calls correctly routed to humans when needed

Call completion rate - Calls that reach a natural conclusion vs. hang-ups

Sentiment Metrics:

Caller satisfaction indicators - Positive/negative language detection

Frustration markers - Repeated questions, raised voice, profanity

Conversation flow quality - Natural back-and-forth vs. awkward pauses

Technical Metrics:

Uptime percentage - Service availability across time periods

Call quality scores - Audio clarity, connection stability

Integration success rates - Calendar bookings that sync, CRM updates that complete
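As a rough illustration, several of the metrics above can be derived from per-call records. The following is a minimal Python sketch; the `Call` fields and the outcome labels are hypothetical and do not reflect any particular platform's export format.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Call:
    # Hypothetical per-call record; field names are assumptions for illustration.
    first_response_ms: int  # caller speech end to AI response start
    outcome: str            # "booking", "lead", "info", "transfer", or "hangup"
    intent_correct: bool    # whether the caller's intent was identified correctly


def summarize(calls: list[Call]) -> dict[str, float]:
    """Aggregate a batch of calls into a few portfolio-level metrics."""
    total = len(calls)
    if total == 0:
        return {}

    def share(predicate) -> float:
        # Fraction of calls for which the predicate holds.
        return sum(1 for c in calls if predicate(c)) / total

    return {
        "median_latency_ms": median(c.first_response_ms for c in calls),
        "booking_rate": share(lambda c: c.outcome == "booking"),
        "completion_rate": share(lambda c: c.outcome != "hangup"),
        "intent_recognition_rate": share(lambda c: c.intent_correct),
    }
```

Running `summarize` per client account and per week gives a baseline against which later drops can be compared.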

How Do You Set Up Automated QA Monitoring?

Effective voice AI QA monitoring requires three components: data collection infrastructure, automated scoring rules, and alert thresholds.

Step 1: Configure Data Collection

Ensure your voice AI platform captures complete call data:

Full call recordings (with appropriate consent and compliance)

Transcriptions with timestamps

Metadata: caller ID, time, duration, outcome codes

Integration events: calendar bookings, CRM updates, SMS sends

Trillet's white-label platform automatically captures this data for every call across all client sub-accounts, making it accessible through both the dashboard and API.

Step 2: Define Scoring Criteria

Create objective rubrics for evaluating calls:

| Criterion | Weight | Scoring Method |
| --- | --- | --- |
| Response latency | 20% | <600ms = 100%, 600-1000ms = 75%, >1000ms = 50% |
| Intent recognition | 25% | Correct = 100%, Partial = 50%, Incorrect = 0% |
| Outcome achieved | 30% | Booking/Lead = 100%, Information provided = 75%, Hang-up = 25% |
| Caller sentiment | 15% | Positive = 100%, Neutral = 75%, Negative = 25% |
| Technical quality | 10% | No issues = 100%, Minor issues = 75%, Major issues = 0% |
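To make the weighting concrete, the rubric above can be turned into a single composite score per call. This is a minimal Python sketch, not a platform feature; the function names and the category labels (`"correct"`, `"info"`, `"none"`, and so on) are assumptions chosen for illustration, while the weights and score bands come from the rubric.

```python
# Weights in percent, taken from the rubric above.
WEIGHTS = {"latency": 20, "intent": 25, "outcome": 30, "sentiment": 15, "technical": 10}

# Score bands from the rubric; the keys are hypothetical labels.
INTENT = {"correct": 100, "partial": 50, "incorrect": 0}
OUTCOME = {"booking": 100, "lead": 100, "info": 75, "hangup": 25}
SENTIMENT = {"positive": 100, "neutral": 75, "negative": 25}
TECHNICAL = {"none": 100, "minor": 75, "major": 0}


def latency_score(latency_ms: float) -> int:
    """Map first-response latency in milliseconds to the rubric's bands."""
    if latency_ms < 600:
        return 100
    if latency_ms <= 1000:
        return 75
    return 50


def composite_score(latency_ms: float, intent: str, outcome: str,
                    sentiment: str, technical: str) -> float:
    """Weighted average of the five criterion scores, on a 0-100 scale."""
    parts = {
        "latency": latency_score(latency_ms),
        "intent": INTENT[intent],
        "outcome": OUTCOME[outcome],
        "sentiment": SENTIMENT[sentiment],
        "technical": TECHNICAL[technical],
    }
    return sum(WEIGHTS[name] * parts[name] for name in WEIGHTS) / 100
```

For example, a call answered in 450ms with the intent recognized, information provided, neutral sentiment, and no technical issues scores 20 + 25 + 22.5 + 11.25 + 10 = 88.75. Outcomes the rubric does not list (such as transfers) raise a `KeyError` here, so a real rubric would need a band for each of them.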

Step 3: Set Alert Thresholds

Configure notifications before problems become client complaints:

Critical alerts: Latency exceeding 2 seconds, system errors, integration failures

Warning alerts: Booking rates falling 20% or more below baseline, negative sentiment spikes

Weekly digests: Performance trends, optimization opportunities
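The critical and warning tiers above could be evaluated with a rule like the following. This is a minimal Python sketch under stated assumptions: the function and parameter names are hypothetical, the 20% drop is read as a relative drop from the baseline booking rate, and sentiment spikes are left out because the thresholds above do not define what counts as a spike.

```python
from typing import Optional


def classify_alert(latency_ms: float,
                   booking_rate: float,
                   baseline_booking_rate: float,
                   system_error: bool = False,
                   integration_failed: bool = False) -> Optional[str]:
    """Return "critical", "warning", or None for one monitoring window."""
    # Critical tier: latency above 2 seconds, system errors, integration failures.
    if latency_ms > 2000 or system_error or integration_failed:
        return "critical"

    # Warning tier: booking rate at least 20% below baseline (relative drop).
    if baseline_booking_rate > 0:
        drop = (baseline_booking_rate - booking_rate) / baseline_booking_rate
        if drop >= 0.20:
            return "warning"

    return None
```

Checking the critical conditions first means a window with both a latency spike and a booking drop is reported once, at the higher severity.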

What Should Client-Facing QA Reports Include?

Client reports should demonstrate value, not overwhelm with data. Focus on outcomes and improvements.

Essential Report Components:

Executive Summary - One-paragraph performance overview with key wins

Call Volume Metrics - Total calls handled, peak times, after-hours coverage

Outcome Metrics - Appointments booked, leads captured, issues resolved

Quality Scores - Overall score trend with month-over-month comparison

Notable Calls - Examples of complex situations handled well

Optimization Actions - What you improved this period based on QA findings

Next Steps - Planned improvements for the coming period

What to Exclude:

Raw technical logs

Every individual call score

Platform-specific jargon

Metrics without context or benchmarks

The goal is to demonstrate that your agency actively manages and improves the client's voice AI rather than deploying it and walking away.

How Does Trillet Support Agency QA Workflows?

Trillet's white-label platform includes built-in QA capabilities designed for agencies managing multiple client accounts.

Centralized Dashboard:

View all client accounts from a single interface

Filter by date range, outcome type, quality scores

Drill down from portfolio overview to individual calls

Automated Analytics:

Call transcriptions with searchable text

Sentiment analysis on every call

Outcome tracking: bookings, leads, transfers, hang-ups

Integration success monitoring

Alert Configuration:

Custom thresholds per client account

Multi-channel notifications: email, Slack, SMS

Escalation rules for critical issues

Reporting Tools:

White-labeled reports with your agency branding

Scheduled automated report delivery to clients

Export capabilities for custom analysis

API Access:

Full data access for custom dashboards

Webhook notifications for real-time monitoring

Integration with third-party analytics tools

This eliminates the need to build QA infrastructure from scratch, allowing agencies to focus on client relationships rather than data plumbing.

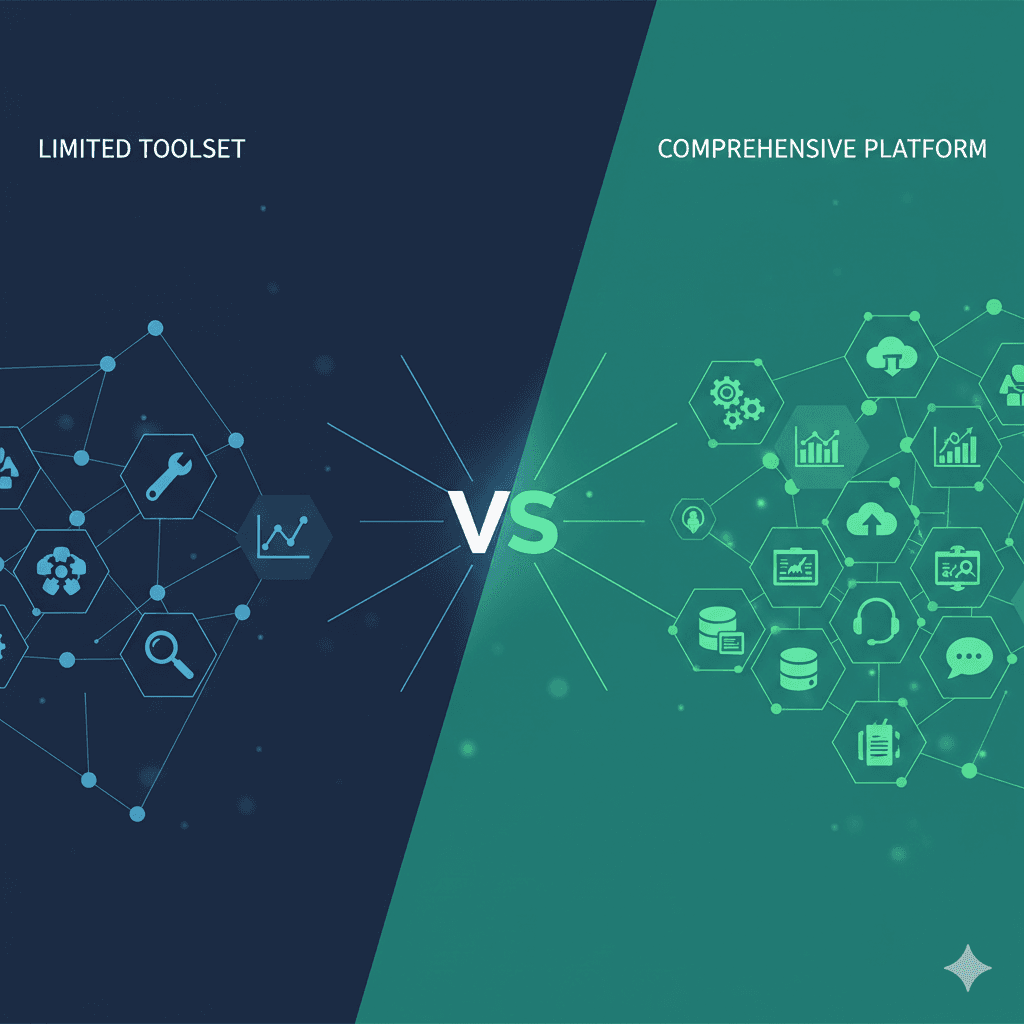

Comparison: QA Capabilities Across Voice AI Platforms

| Feature | Trillet | Synthflow | VoiceAIWrapper |
| --- | --- | --- | --- |
| Call recordings | Included | Included | Provider-dependent |
| Transcriptions | Included | Included | Provider-dependent |
| Sentiment analysis | Native | Add-on | Not available |
| Multi-account dashboard | Yes | Yes | Limited |
| Custom alert thresholds | Yes | Limited | No |
| White-labeled reports | Yes | Extra cost | No |
| API data access | Full | Limited | Provider-dependent |
| Agency pricing | $299/month | $1,250+/month | $299/month |

Trillet's native platform provides comprehensive QA tools at agency-friendly pricing, while wrapper platforms often require additional subscriptions for similar capabilities.

What Are Common Voice AI Quality Issues and How Do You Fix Them?

Issue: High latency causing unnatural pauses

Symptoms: Callers talking over the AI, frustrated "hello?" repetitions

Root causes:

Complex conversation flows adding processing time

Knowledge base too large or poorly organized

Integration timeouts slowing responses

Fixes:

Simplify conversation logic

Optimize knowledge base structure

Set integration timeout limits with fallbacks
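One way to apply the last fix is to wrap each integration call in a time limit and fall back to a safe default when it runs over. This is a minimal Python sketch using only the standard library; `call_with_fallback` and the example lookup functions are hypothetical, and a real deployment would use whatever timeout controls the voice platform and integration client expose.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

# Shared worker pool for outbound integration calls.
_pool = ThreadPoolExecutor(max_workers=4)


def call_with_fallback(fn, timeout_s: float, fallback):
    """Run fn() in a worker thread; return fallback on timeout or error."""
    future = _pool.submit(fn)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        # The worker may still finish in the background; we stop waiting for it.
        future.cancel()
        return fallback
    except Exception:
        # The integration itself raised; degrade the same way.
        return fallback


def slow_calendar_lookup():
    # Stand-in for a calendar API call that responds too slowly.
    time.sleep(0.3)
    return ["10:00", "14:30"]


def fast_calendar_lookup():
    # Stand-in for a calendar API call that responds promptly.
    return ["09:00"]
```

With a fallback such as an empty slot list, the agent can offer to take a message or arrange a callback instead of leaving the caller in silence while the integration hangs.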

Issue: Incorrect information provided to callers

Symptoms: Client complaints about wrong hours, pricing, or service details

Root causes:

Outdated training data

Conflicting information sources

Website content changes not synced

Fixes:

Regular knowledge base audits (monthly minimum)

Single source of truth for business information

Automated sync with client websites where possible

Issue: Low appointment booking rates

Symptoms: Calls handled but outcomes below benchmarks

Root causes:

Calendar integration misconfigured

Booking flow too complex

AI not prompting for appointments

Fixes:

Verify calendar sync end-to-end

Reduce booking steps to minimum required

Adjust conversation prompts to suggest appointments naturally

Issue: High transfer/escalation rates

Symptoms: Too many calls going to humans, defeating the purpose of the AI

Root causes:

Training gaps for common scenarios

Escalation triggers too sensitive

Client expectations misaligned

Fixes:

Review transferred call transcripts for training opportunities

Adjust escalation thresholds

Clarify AI scope with client

Frequently Asked Questions

How often should agencies review voice AI quality metrics?

Review high-level metrics weekly and conduct a deep-dive analysis monthly. Set up automated alerts for critical issues so you catch problems immediately, but keep alert thresholds selective so that constant notifications do not lead to alert fatigue.

What quality score should agencies target for client voice AI?

Target a composite quality score of 85% or higher. Scores from 75% to just under 85% indicate optimization opportunities. Scores below 75% require immediate attention. These benchmarks may vary by industry and call complexity.
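These bands can be expressed as a small helper for dashboards or reports. This is a minimal Python sketch; the function name and band labels are hypothetical, and treating a score of exactly 85 as on target and exactly 75 as an optimization case is an assumption about the boundary values.

```python
def score_band(score: float) -> str:
    """Map a composite quality score (0-100) to an action band."""
    if score >= 85:
        return "on target"
    if score >= 75:
        return "optimize"
    return "needs attention"
```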

Which Trillet product should I choose?

If you're a small business owner looking for AI call answering, start with Trillet AI Receptionist at $29/month. If you're an agency wanting to resell voice AI to clients, explore Trillet White-Label: Studio at $99/month (up to 3 sub-accounts) or Agency at $299/month (unlimited sub-accounts).

Can agencies use QA data to justify pricing increases?

Yes. Documented quality improvements and measurable business outcomes (leads captured, appointments booked) provide concrete justification for value-based pricing. Agencies that can show this data are better positioned to retain clients and command premium rates.

How do you handle clients who want access to raw call data?

Trillet's white-label platform supports client-level dashboard access with appropriate permissions. You control what clients see, from full call recordings to summary reports only. Discuss data access expectations during onboarding to avoid surprises.

Conclusion

Voice AI quality assurance separates agencies that churn clients from those that build lasting partnerships. The fundamentals are straightforward: track the right metrics, automate monitoring, catch issues early, and demonstrate value through data.

Trillet's white-label Agency plan at $299/month includes the QA infrastructure agencies need without requiring separate analytics subscriptions or custom development. Combined with unlimited sub-accounts and $0.09/minute pricing, it lets agencies scale their voice AI practice while maintaining quality standards.

Ready to explore how Trillet's QA capabilities can strengthen your agency's voice AI offering? Visit Trillet White-Label pricing to see the full feature set.
